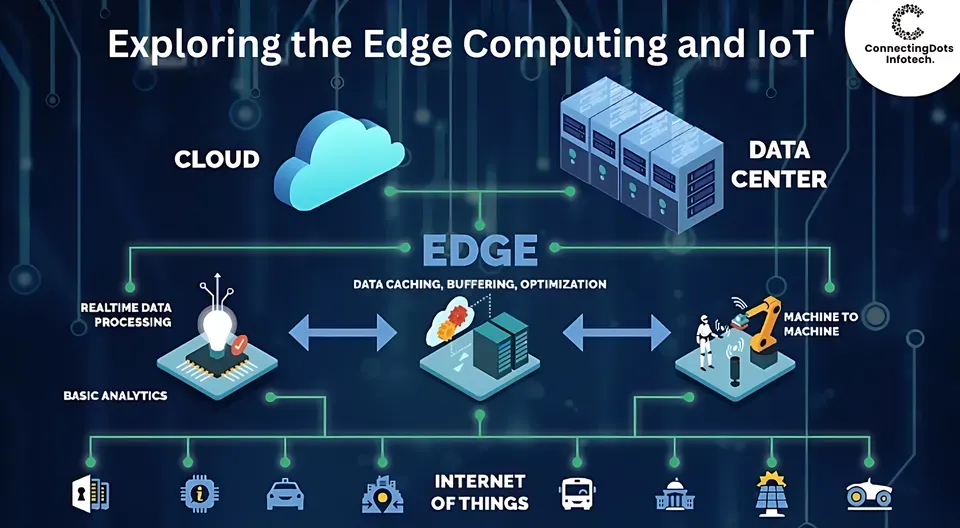

Edge Technology is not just a buzzword; it represents a practical shift in where and how we process data. From edge computing to IoT edge devices, this approach brings computing, storage, and analytics closer to the data source. It enables real-time data processing at the edge, delivering lower latency, reduced bandwidth use, and improved privacy even when connectivity is unreliable. By moving critical tasks closer to devices and sensors, organizations can make decisions locally and apply edge security measures where the data is generated. As industries adopt a hybrid edge and cloud model, the approach becomes a foundational layer for faster decisions and more resilient operations.

Viewed through the lens of decentralized computing, this paradigm pushes computation toward the devices and sensors that generate data. It emphasizes near-data processing, where lightweight analytics and AI inference run on local gateways, micro data centers, or embedded nodes. By distributing workloads across the network edge, organizations can maintain privacy, reduce backhaul, and support resilient operations even when cloud connectivity is interrupted. The strategy also aligns with concepts like edge AI, secure edge networking, and continuous local monitoring, which together enable responsive actions without a round trip to the central cloud every time. In practice, the result is a distributed, near-site architecture that delivers faster insights across industries.

Edge Technology: Accelerating Real-Time Decision Making at the Edge

Edge Technology turns computation into a distributed, proximity-based model where computing, storage, and analytics sit close to data sources—the devices and sensors that generate information. This approach leverages edge computing, IoT edge devices, and real-time data processing at the edge to reduce backhaul to distant data centers and deliver faster insights.

Because data is processed near its source, latency drops, bandwidth is conserved, and privacy improves because sensitive information need not leave the local environment. This is valuable on manufacturing lines, in autonomous vehicles, and at remote sensor sites, where milliseconds matter. The result is a hybrid architecture that combines the responsiveness of edge computing with the scalability of centralized analytics in the cloud.

Edge Computing Foundations: From Devices to Micro Data Centers

The core stack begins with edge devices and micro data centers—the physical endpoints that perform computation near the data source. These range from small embedded boards and rugged gateways to compact micro data centers perched near factories or stores. Their defining characteristics are low latency, high reliability, and robustness in challenging environments.

At the software level, local data processing and analytics run on the edge to prepare, filter, and analyze data without ferrying raw streams upstream. Edge networking and orchestration ensure workloads move smoothly between devices, gateways, and regional hubs, while maintaining secure, fault-tolerant operation even with intermittent connectivity.
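
To make the idea of filtering and analyzing data locally more concrete, here is a minimal sketch in Python. It assumes a window of numeric sensor readings and a fixed acceptable range; the function name `summarize_window` and the thresholds are illustrative, not part of any particular edge platform. Instead of sending every raw reading upstream, the node sends one compact summary plus any out-of-range values.

```python
from statistics import mean

def summarize_window(readings, low, high):
    """Reduce a window of raw sensor readings to a compact summary.

    Readings outside [low, high] are treated as anomalies and reported
    individually; in-range readings are collapsed into min/max/mean.
    """
    normal = [r for r in readings if low <= r <= high]
    anomalies = [r for r in readings if r < low or r > high]
    summary = {"count": len(readings), "anomalies": anomalies}
    if normal:
        summary.update(
            min=min(normal),
            max=max(normal),
            mean=round(mean(normal), 2),
        )
    return summary

# Hypothetical temperature window with one out-of-range spike.
window = [20.1, 20.3, 20.2, 35.7, 20.4]
print(summarize_window(window, low=15.0, high=30.0))
```

The summary is what would travel upstream; the raw window can stay on the device or be discarded, depending on retention requirements.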

Edge AI and Inference: On-Device Intelligence for Fast Insights

Edge AI enables running AI models directly on edge devices, delivering real-time decisions where data is created. Efficient runtimes, quantization, pruning, and hardware acceleration push inference to the edge, letting organizations react quickly without pinging the cloud. This on-device intelligence is ideal for sensor arrays, cameras, and industrial equipment.
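
As a rough illustration of quantization, the sketch below maps floating-point weights to 8-bit integers with a single scale factor (symmetric, per-tensor quantization). This is a simplified teaching example in plain Python, assuming a non-empty list of weights; production toolchains use more elaborate schemes, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one scale factor.

    Assumes `weights` is a non-empty list of floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.03, 0.9]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight. Whether that error is acceptable depends on the model and has to be checked against accuracy targets.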

By keeping inference at the edge, organizations reduce data transfer, protect privacy, and improve resilience against network outages. On-device learning and model adaptation can be performed in a privacy-preserving way, while centralized training in the cloud periodically retrains models and pushes updated versions back to edge devices.

Edge Security in a Distributed Landscape: Protecting Data at Scale

The distributed nature of edge deployments expands the attack surface, making edge security a first-class concern. A layered approach combines hardware-based protections, secure boot, encrypted communications, identity and access management, and zero-trust principles across nodes. Data minimization and on-device processing help limit exposure of sensitive information.
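
One small piece of such a layered approach is making sure a gateway can tell whether a message really came from a known device and was not altered in transit. The sketch below uses Python's standard `hmac` module with a pre-shared key. It is only an illustration of message authentication: the key value and function names are assumptions, and a real deployment would typically combine this with TLS, per-device credentials, and hardware-backed key storage.

```python
import hashlib
import hmac
import json

def sign_message(key: bytes, payload: dict) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}

def verify_message(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])

# Hypothetical pre-shared key; never hard-code real keys.
device_key = b"example-key-for-illustration-only"
msg = sign_message(device_key, {"sensor": "line-1", "temp_c": 20.3})
print(verify_message(device_key, msg))
```

A message whose body or tag has been modified, or that was signed with a different key, fails verification and can be dropped before it reaches any downstream processing.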

Organizations should enforce regular patching, secure software supply chains, anomaly detection, and rapid incident response across the distributed edge. Governance, audits, and strict configuration management ensure consistent controls from device to gateway to central cloud.

Hybrid Architectures: Balancing Edge and Cloud for Scalable Analytics

Edge technology sits alongside cloud services in a tiered architecture. Critical, time-sensitive tasks run at the edge, while heavier analytics, historical analysis, and global coordination happen in the cloud. This balance preserves the speed of edge computing with the scalability and long-term storage of centralized platforms.
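
A simple way to reason about this split is to compare each task's latency budget with the round-trip time to the cloud. The sketch below is a deliberately naive placement rule; the task names, budgets, and the 80 ms round-trip figure are made-up values for illustration, and real placement decisions also weigh cost, data volume, and compute capacity.

```python
def choose_tier(latency_budget_ms: float, cloud_round_trip_ms: float) -> str:
    """Place a task at the edge when a cloud round trip would use up its budget.

    If the round trip alone meets or exceeds the budget, no time is left
    for computation in the cloud, so the task has to run at the edge.
    """
    return "edge" if latency_budget_ms <= cloud_round_trip_ms else "cloud"

# Hypothetical tasks and latency budgets in milliseconds.
tasks = {
    "emergency_stop": 10,
    "quality_inspection": 50,
    "weekly_trend_report": 60_000,
}
placement = {
    name: choose_tier(budget, cloud_round_trip_ms=80)
    for name, budget in tasks.items()
}
print(placement)
```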

Data orchestration and pipelines move results from edge nodes to cloud-based systems, enabling centralized analytics without overwhelming network resources. The synergy between edge and cloud supports smarter decisions, more efficient data governance, and flexible deployment strategies across IoT edge devices.
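
Moving results from edge nodes to the cloud has to tolerate an uplink that comes and goes. One common pattern is store-and-forward: queue results locally and drain the queue when the connection is available. The following is a minimal in-memory sketch; the class name, the `send` callback, and the use of `ConnectionError` to signal an outage are assumptions for illustration, and a production pipeline would usually persist the queue to disk.

```python
from collections import deque

class StoreAndForwardBuffer:
    """Queue results locally and forward them in order when the uplink works."""

    def __init__(self, send, max_items=1000):
        self._send = send  # callable that raises ConnectionError when offline
        self._queue = deque(maxlen=max_items)  # oldest items dropped when full

    def publish(self, item):
        """Queue an item and try to forward everything that is pending."""
        self._queue.append(item)
        return self.flush()

    def flush(self):
        """Send pending items oldest-first; stop at the first failure."""
        sent = 0
        while self._queue:
            try:
                self._send(self._queue[0])
            except ConnectionError:
                break
            self._queue.popleft()  # remove only after a successful send
            sent += 1
        return sent

    def pending(self):
        return len(self._queue)
```

Bounding the queue with `max_items` is a design choice: it protects a small device from running out of memory during a long outage, at the cost of losing the oldest results.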

Practical Roadmap to Deploy Edge Technology: From Pilot to Production

A practical deployment begins with clear goals and success metrics, focusing on time-sensitive use cases, latency targets, and privacy requirements. Start with a pilot on a single line or regional site to validate edge computing choices before expanding to more IoT edge devices and locations.

Then define the reference architecture, hardware, and software platforms, including edge-native runtimes and orchestration. Build governance and security into the design, measure latency reductions and bandwidth savings, and iterate toward production-scale rollouts with scalable monitoring and incident response.
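
Measuring latency reductions and bandwidth savings can start with something as simple as comparing tail latency and daily traffic before and after a pilot. The sketch below uses Python's standard `statistics.quantiles`; all sample values are invented for illustration, and the helper names are hypothetical.

```python
from statistics import quantiles

def p95(samples):
    """95th percentile: the last of the 19 cut points that split data into 20 parts."""
    return quantiles(samples, n=20, method="inclusive")[-1]

def percent_reduction(before, after):
    """Relative improvement, as a percentage of the baseline value."""
    return 100.0 * (before - after) / before

# Hypothetical response times in milliseconds, before and after the pilot.
cloud_latency_ms = [110, 125, 98, 140, 132, 105, 118, 121, 160, 115]
edge_latency_ms = [12, 15, 11, 18, 14, 13, 16, 12, 22, 14]

# Hypothetical daily uplink traffic in megabytes.
raw_mb_per_day = 4800
summarized_mb_per_day = 360

print(percent_reduction(p95(cloud_latency_ms), p95(edge_latency_ms)))
print(percent_reduction(raw_mb_per_day, summarized_mb_per_day))
```

Tracking a tail percentile rather than the average is a deliberate choice here, since time-sensitive use cases are usually constrained by their slowest responses.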

Frequently Asked Questions

What is Edge Technology and how does it enable real-time data processing at the edge?

Edge Technology refers to computing, storage, and analytics that occur close to data sources, using edge devices, micro data centers, and local networks. This proximity enables real-time data processing at the edge, reducing latency and lowering bandwidth needs. Organizations often blend edge and cloud workflows, keeping time-sensitive tasks at the edge while heavier analytics run in the cloud. Key components include edge devices, local data processing, edge AI inference, and edge networking.

How does edge security fit into an Edge Technology strategy?

Edge security is critical because the edge expands the attack surface across distributed nodes, devices, and networks. A defense-in-depth approach combines hardware protections, secure boot chains, encrypted communications, identity and access management, and zero-trust principles applied across distributed nodes. Because data at the edge can be sensitive and subject to varying regulatory regimes, emphasize local processing and selective synchronization with cloud systems to support privacy and compliance. Regular patching, secure software supply chains, and rapid incident response are essential.

What role does edge AI play in the Edge Technology stack?

Edge AI enables running AI models at the edge to support real-time decisions without sending data to the cloud. This requires efficient runtimes and model optimization techniques such as quantization and pruning, plus hardware accelerators for edge inference. On IoT edge devices and other edge hardware, edge AI delivers faster, more private insights and reduces data transfer, though model optimization should be validated to confirm that accuracy remains acceptable.

How do IoT edge devices fit into the Edge Technology architecture?

IoT edge devices are the physical endpoints that perform computation near the data source, ranging from small embedded boards to rugged industrial gateways. They deliver low latency and reliable operation in challenging environments, enabling local data processing and analytics. These devices are complemented by local processing software and, when appropriate, seamless integration with cloud services for broader analytics and long-term storage.

How does edge computing compare with cloud computing and on-device processing?

Edge computing reduces latency and bandwidth use compared with cloud-first processing because data no longer has to make a round trip to a distant data center, while on-device processing offers near-instant results but may be limited by device capabilities. A hybrid approach balances these strengths: perform time-sensitive tasks at the edge, send only relevant results to the cloud for deeper analytics, and preserve privacy by handling data locally where possible. This trade-off highlights the core benefits and limitations of Edge Technology.

What are best practices for adopting Edge Technology in an organization?

Follow a phased implementation roadmap: define goals and success metrics, start with a pilot, select architecture and hardware, invest in edge-native software platforms, and build governance and security into the design. Scale thoughtfully in waves, monitor latency reductions and bandwidth savings, and continuously measure business impact. Key practices include robust edge security (hardware protections, secure boot, encrypted channels, IAM, and zero-trust), interoperability through standards, data governance with data minimization, and reliable orchestration across distributed edge nodes.

| Topic | Key Points |
|---|---|
| What is Edge Technology? | Computing, storage, and analytics near data sources; reduces need to send data to central data centers; enables lower latency, reduced bandwidth, improved privacy, and operation with intermittent connectivity. |
| Why Edge Technology matters | Hybrid approach: critical tasks run at the edge while batch processing/learning runs in the cloud; combines responsiveness with scalability. |
| Core components | Edge devices/micro data centers; local data processing; Edge AI/inference engines; edge networking/orchestration; cloud integration and centralized analytics. |
| Key benefits | Low latency, reduced bandwidth usage, improved privacy, resilience, and the ability to act quickly with real-time insights. |
| Speed and latency | Faster real-time responses; lower backhaul traffic; enables time-critical decision-making. |
| Security considerations | Distributed threat landscape; hardware protections, secure boot, encrypted communications, identity and access management, zero-trust; data minimization and on-device processing. |
| Real-world use cases | Manufacturing (predictive maintenance), Healthcare (local vital signs analysis), Smart cities/transportation, Retail (real-time fraud detection), Agriculture (soil/climate monitoring). |
| Implementation roadmap | Define goals; start with a pilot; select architecture/hardware; invest in edge-native software; build governance/security; scale; measure and iterate. |
| Edge vs cloud trade-offs | Latency, bandwidth, privacy, complexity, and cost; edge offers a middle ground between cloud-centric and fully on-device approaches. |

Summary

The table above summarizes the key points about Edge Technology, highlighting its proximity-based compute model, hybrid cloud-edge dynamics, core components, benefits, security considerations, real-world use cases, implementation steps, and trade-offs with cloud and on-device solutions. The content emphasizes how edge computing enables low latency, bandwidth efficiency, and privacy, while balancing complexity and governance through a phased adoption roadmap.