Cloud to Edge deployment is redefining how organizations process data by bringing computation closer to where data is produced, enabling faster, more context-aware decisions. By blending cloud-scale analytics with edge computing, teams can turn data into insight sooner and respond to operational events more quickly in today's data-rich environments. The approach reduces how far data must travel, makes devices smarter, and shortens feedback loops. It fosters a hybrid ecosystem in which lightweight workloads run at the edge while heavier analytics remain in the cloud. As enterprises adopt this pattern, they gain resilience, lower bandwidth costs, and more responsive experiences across a wide range of applications.

The same idea goes by several other names. It can be described as a hybrid cloud-to-edge continuum, in which computation happens near the source and data stays close to the devices that produce it. Related terms include edge-centric computing, near-data processing, and distributed computing at the edge, each of which emphasizes locality, privacy, and reduced network load. Whatever the label, the core idea is the same: connecting cloud-native analytics with on-site intelligence.

Cloud to Edge Deployment: A Hybrid Framework for Modern IT

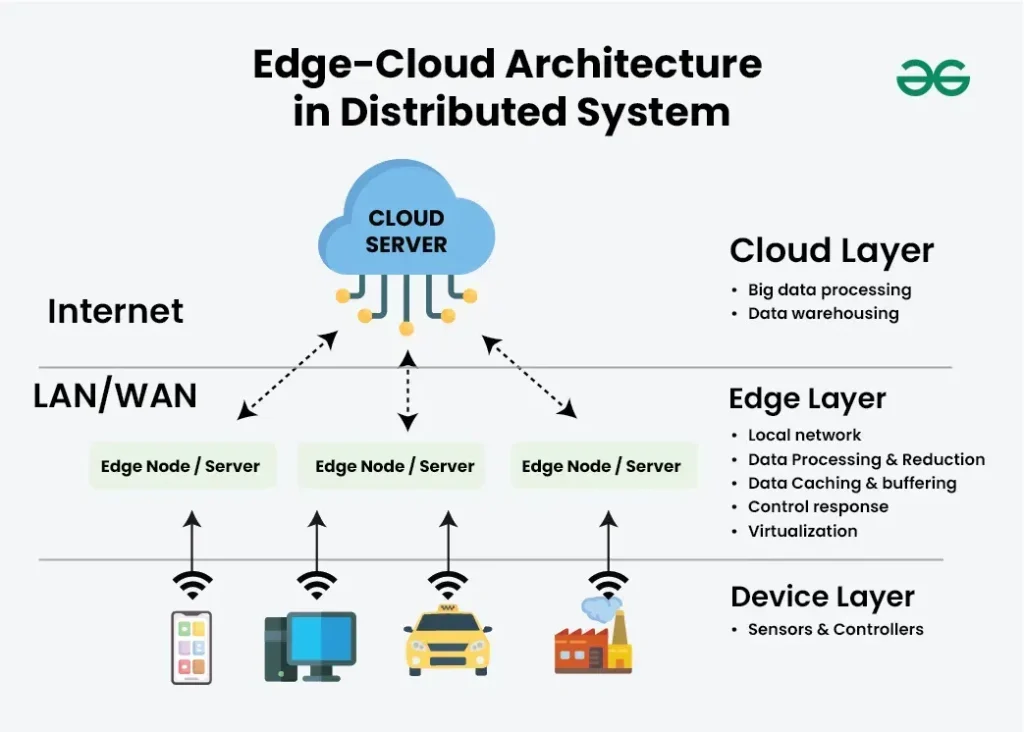

Cloud to Edge deployment represents a hybrid framework that blends centralized cloud capabilities with localized edge resources. This approach leverages edge computing to bring compute closer to data sources, while still tapping into cloud-scale analytics for deeper insights. By combining cloud-edge integration with IoT deployment patterns, organizations can orchestrate workloads across a continuum, achieving faster responses and smarter decisions at the point of data creation.

In practice, this framework places light and medium workloads at the edge and keeps heavier analytics and long-running models in the cloud. The result is a symbiotic ecosystem: edge inference and data preprocessing feed summarized results to the cloud, and the cloud in return delivers model updates, governance policies, and orchestration commands to the edge. This architecture reduces latency and improves bandwidth efficiency while maintaining data governance and security across the edge infrastructure.
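To make the edge-to-cloud data flow concrete, the following Python sketch shows one way an edge node might collapse a window of raw sensor readings into a compact summary before uploading it. The `WindowSummary` type, the `summarize_window` function, and the choice of min/max/mean as aggregates are illustrative assumptions, not part of any particular platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass(frozen=True)
class WindowSummary:
    """Compact payload an edge node could send upstream instead of every raw reading."""
    sensor_id: str
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_window(sensor_id: str, readings: Sequence[float]) -> WindowSummary:
    """Reduce one time window of raw readings to a handful of aggregate values."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return WindowSummary(
        sensor_id=sensor_id,
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
    )


if __name__ == "__main__":
    raw = [20.1, 20.4, 21.0, 20.8, 20.2]  # hypothetical temperature samples
    print(summarize_window("temp-01", raw))
```

Five numbers travel to the cloud regardless of how many samples the window held, which is where the bandwidth savings come from.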

Edge Computing for Latency Reduction and Real-Time Insights

Edge computing shifts processing closer to where data is produced, enabling real-time insights and more predictable response times. By performing critical computations at or near the data source, organizations avoid round-trips to centralized data centers, which substantially reduces latency for time-sensitive applications such as autonomous systems, industrial automation, and smart devices.

This immediacy unlocks new possibilities across industries, from responsive manufacturing floors to adaptive retail experiences. Edge computing complements cloud analytics by handling the initial data filtering, event detection, and local decision making, while the cloud handles aggregation, historical analysis, and policy updates. Together, they create a resilient, low-latency foundation for modern digital operations.
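The division of labor above can be sketched as a small local decision loop. In this illustrative Python example, readings that breach a limit trigger an immediate local action and become events for the cloud, while normal readings are handled entirely on site. The `stop_line` action, the fixed limit, and the function names are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Event:
    """An event worth reporting to the cloud, with the action already taken locally."""
    sensor_id: str
    value: float
    action: str


def decide_locally(sensor_id: str, value: float, limit: float) -> Optional[Event]:
    """Return an Event if the reading breaches the limit, otherwise None."""
    if value > limit:
        # The edge acts immediately; no cloud round-trip sits on this path.
        return Event(sensor_id, value, action="stop_line")  # hypothetical action name
    return None


def process_stream(
    sensor_id: str, values: Iterable[float], limit: float
) -> Tuple[List[Event], int]:
    """Split a stream into events for the cloud and a count of readings handled on site."""
    events: List[Event] = []
    handled_locally = 0
    for value in values:
        event = decide_locally(sensor_id, value, limit)
        if event is None:
            handled_locally += 1
        else:
            events.append(event)
    return events, handled_locally


if __name__ == "__main__":
    events, local = process_stream("vibration-07", [0.2, 0.3, 1.5, 0.4], limit=1.0)
    print(events, local)
```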

Cloud-Edge Integration Patterns for Scalable IoT Deployment

Several cloud-edge integration patterns can be combined to scale IoT deployments effectively. In the central-cloud-with-distributed-edge-nodes pattern, heavy analytics stay in the cloud while edge sites handle data collection and local processing, which reduces data egress and improves responsiveness.

Fog computing and micro edge layers expand this concept by deploying multiple small compute nodes near sensors, forming a fog of compute resources that preprocess data before cloud transmission. Edge-first analytics with cloud-backed orchestration further optimizes bandwidth by sending only anomalous events or aggregated insights, while containerized edge workloads enable portable, secure, and scalable deployments across sites.
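Edge-first filtering can be illustrated with a rolling z-score check, one simple anomaly-detection technique among many. The sketch below assumes a single numeric stream, a trailing window of 20 readings, and a threshold of three standard deviations; the function name and defaults are illustrative, and production systems would likely use detectors tuned to their own data.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def anomalies_to_forward(
    values: Iterable[float], window: int = 20, z_threshold: float = 3.0
) -> List[Tuple[int, float]]:
    """Return (index, value) pairs that deviate strongly from the trailing window.

    Only these pairs would be sent to the cloud; everything else stays on the edge node.
    """
    history: Deque[float] = deque(maxlen=window)
    flagged: List[Tuple[int, float]] = []
    for i, value in enumerate(values):
        if len(history) == window:  # wait until the window is full
            mu = mean(history)
            sigma = pstdev(history)
            deviation = abs(value - mu)
            # A perfectly flat history (sigma == 0) makes any change anomalous.
            if (sigma == 0 and deviation > 0) or (
                sigma > 0 and deviation / sigma > z_threshold
            ):
                flagged.append((i, value))
        history.append(value)
    return flagged


if __name__ == "__main__":
    readings = [10.0, 10.5, 9.5] * 10 + [30.0]  # hypothetical stream with one spike
    print(anomalies_to_forward(readings))
```

In this example only one of 31 readings would leave the site, which is the bandwidth effect edge-first analytics aims for.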

Building Robust Edge Infrastructure for Industrial IoT

A solid edge infrastructure comprises intelligent edge devices and gateways, compact data centers, and reliable networking near the data source. Edge devices collect data, perform preprocessing, and support latency-sensitive decisions, while edge gateways manage connectivity and data routing to the broader ecosystem.

To manage scale, organizations often deploy containerized workloads at the edge using lightweight orchestrators like K3s, enabling consistent deployment, updates, and health monitoring across many sites. A well-designed edge infrastructure supports offline operation, secure data exchange with the cloud, and resilient failover, ensuring continuity in industrial IoT deployments even when connectivity is intermittent.
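Offline operation is often handled with a store-and-forward buffer: the edge node queues outbound messages while the uplink is down and drains them in order once it returns. The class below is a minimal in-memory sketch under stated assumptions (string messages, a caller-supplied `send` function that returns `True` on success, and a drop-oldest policy when the buffer fills); a real deployment would typically persist the queue to disk so it survives restarts.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForwardBuffer:
    """Hold outbound messages while the uplink is down and flush them in order later.

    The buffer is bounded; when it is full, the oldest message is dropped
    (an illustrative policy, not a requirement).
    """

    def __init__(self, capacity: int) -> None:
        self._queue: Deque[str] = deque(maxlen=capacity)
        self.dropped = 0

    def enqueue(self, message: str) -> None:
        if len(self._queue) == self._queue.maxlen:
            self.dropped += 1  # deque(maxlen=...) evicts the oldest item on append
        self._queue.append(message)

    def flush(self, send: Callable[[str], bool]) -> int:
        """Send queued messages oldest-first; stop at the first failure and keep the rest."""
        sent = 0
        while self._queue:
            if not send(self._queue[0]):
                break  # uplink still unavailable; retry on the next flush
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```

Removing a message only after `send` succeeds means a failed delivery is retried on the next flush rather than lost.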

Security, Privacy, and Governance in Edge-Centric Architectures

Security must be embedded at every layer of the edge-centric architecture. A zero-trust mindset, secure boot, signed updates, encrypted data in transit, and robust threat monitoring are essential as edge devices proliferate across sites. Data residency and encryption policies help protect sensitive information while complying with regulatory requirements.
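Signed updates mean a device installs new software only if it can verify who produced it and that it was not altered in transit. To stay within the Python standard library, this sketch substitutes an HMAC-SHA256 tag with a shared key for a true digital signature; real fleets commonly use public-key signatures so that devices hold only a verification key. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_update(key: bytes, payload: bytes) -> str:
    """Publisher side: compute an HMAC-SHA256 tag over the update payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_update(key: bytes, payload: bytes, tag: str) -> bool:
    """Device side: recompute the tag and compare in constant time."""
    expected = sign_update(key, payload)
    return hmac.compare_digest(expected, tag)


def install_if_valid(key: bytes, payload: bytes, tag: str) -> str:
    """Refuse to install anything whose tag does not match."""
    if not verify_update(key, payload, tag):
        return "rejected"
    # A hypothetical installation step would run here.
    return "installed"


if __name__ == "__main__":
    key = b"demo-shared-key"  # illustrative only; never hard-code real keys
    image = b"firmware-v2"
    tag = sign_update(key, image)
    print(install_if_valid(key, image, tag), install_if_valid(key, image + b"!", tag))
```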

Governance becomes more complex in distributed environments. Implement auditable data flows, centralized visibility, and policy-driven access controls to maintain trust across cloud-edge environments. Regular patching, incident response planning, and observability across edge sites are critical to maintaining security, reliability, and compliance in an IoT deployment.
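Policy-driven access control with auditable data flows can be illustrated by a tiny policy engine that checks both the caller's permissions and a data-residency rule, then records every decision. The role names, actions, and region identifiers below are hypothetical; real deployments would typically rely on an identity provider and a dedicated policy service rather than an in-process table.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class PolicyEngine:
    # Hypothetical policy table: role -> set of permitted actions.
    permissions: Dict[str, Set[str]]
    # Regions this site's data may be sent to (a data-residency constraint).
    allowed_regions: Set[str]
    # Each entry: (role, action, destination_region, allowed).
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def authorize(self, role: str, action: str, destination_region: str) -> bool:
        allowed = (
            action in self.permissions.get(role, set())
            and destination_region in self.allowed_regions
        )
        # Log allowed and denied decisions alike so data flows can be audited later.
        self.audit_log.append((role, action, destination_region, allowed))
        return allowed


if __name__ == "__main__":
    engine = PolicyEngine(
        permissions={"analyst": {"read"}, "operator": {"read", "export"}},
        allowed_regions={"eu-west"},
    )
    print(engine.authorize("operator", "export", "us-east"), engine.audit_log)
```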

Roadmap to Cloud to Edge Adoption: From Assessment to Scale

A practical adoption roadmap begins with assessing workloads to identify latency-sensitive and bandwidth-intensive tasks suitable for edge processing. Map data flows, privacy constraints, and regulatory requirements to define a reference architecture that balances local processing with cloud analytics.
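The assessment step can be approximated with a few explicit placement rules. The sketch below is illustrative only: it assumes each workload is described by a latency budget, a raw data rate, and a residency flag, and it compares them against a measured cloud round-trip time and uplink capacity. The rule order and all names are assumptions, not a standard methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float  # maximum acceptable response time
    data_rate_mbps: float     # raw data produced at the site
    must_stay_on_site: bool   # privacy or regulatory constraint


def place(w: Workload, cloud_rtt_ms: float, uplink_mbps: float) -> str:
    """Suggest 'edge' or 'cloud' for a workload using simple, illustrative rules."""
    if w.must_stay_on_site:
        return "edge"  # residency rules out shipping the raw data
    if w.latency_budget_ms < cloud_rtt_ms:
        return "edge"  # a cloud round-trip alone would exceed the budget
    if w.data_rate_mbps > uplink_mbps:
        return "edge"  # the uplink cannot carry the raw stream; preprocess locally
    return "cloud"


if __name__ == "__main__":
    rtt_ms, uplink = 80.0, 50.0  # hypothetical site measurements
    for w in [
        Workload("safety-stop", 10, 1, False),
        Workload("monthly-report", 60_000, 1, False),
    ]:
        print(w.name, place(w, rtt_ms, uplink))
```

Checking the hard constraints (residency, latency) before the cost-driven one (bandwidth) keeps the reasoning for each placement easy to audit.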

Next, select technologies and platforms that align with current ecosystems, pilot in controlled environments, and roll out in phased waves across sites. Establish robust security controls, observability, and KPIs for latency, data savings, uptime, and model accuracy to guide scale and optimization as you expand cloud-edge integration across the organization.
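The KPIs named above reduce to simple arithmetic once the raw measurements are collected. This Python sketch computes a nearest-rank latency percentile, the fraction of raw data kept on site, uptime, and model accuracy. The function names, and the choice of nearest-rank over an interpolating percentile, are illustrative assumptions.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need at least one value and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


def data_savings(raw_bytes: int, sent_bytes: int) -> float:
    """Fraction of raw data processed at the edge and never uploaded."""
    if raw_bytes <= 0:
        raise ValueError("raw_bytes must be positive")
    return 1 - sent_bytes / raw_bytes


def uptime(up_seconds: float, total_seconds: float) -> float:
    """Share of the reporting period during which the site was available."""
    return up_seconds / total_seconds


def accuracy(correct: int, total: int) -> float:
    """Share of edge-model predictions that were correct in an evaluation set."""
    return correct / total


if __name__ == "__main__":
    latencies_ms = [12, 15, 11, 40, 13, 14, 12, 16, 13, 90]  # hypothetical samples
    print(percentile(latencies_ms, 95), data_savings(1_000_000, 50_000))
```

Tail percentiles such as p95 expose the occasional slow response that an average would hide.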

Frequently Asked Questions

What is Cloud to Edge deployment, and how does it drive latency reduction in an IoT deployment?

Cloud to Edge deployment distributes workloads across a continuum from centralized cloud data centers to edge nodes near data sources. This arrangement reduces latency by processing time-sensitive data locally at the edge, sending only summarized results to the cloud for deeper analytics, and enabling faster, real-time decisions in IoT deployment scenarios.

How does cloud-edge integration enable real-time insights at the edge in a Cloud to Edge deployment?

Cloud-edge integration connects edge infrastructure with cloud services for orchestration, policy management, and deeper analytics. By running lightweight analytics and inference at the edge and transmitting only essential results to the cloud, organizations gain real-time insights with reduced data transfer and improved responsiveness.

Which architectural patterns commonly support Cloud to Edge deployment in IoT deployments?

Common patterns include a central cloud that runs heavy analytics alongside distributed edge nodes that process data locally, fog computing and micro edge layers that preprocess data, edge-first analytics with cloud-backed orchestration, containerized edge workloads with lightweight orchestration, and data-centric security with policy-driven deployment to enforce governance at the edge.

What security considerations are critical for Cloud to Edge deployment across edge infrastructure?

Security should be top-of-mind across edge infrastructure: adopt a zero-trust approach, enforce secure boot, use signed updates, encrypt data in transit and at rest, maintain auditable logs, and implement data residency and governance policies to protect privacy and ensure compliance in distributed environments.

How can latency reduction be maximized with edge-first analytics in a Cloud to Edge deployment?

Latency reduction is maximized by deploying analytics and AI inference at the edge, leveraging hardware accelerators, and sending only anomalous events or aggregated insights to the cloud. Offline-first strategies and robust edge caching further improve responsiveness when connectivity to the cloud is limited.

What role does orchestration play in managing edge infrastructure for Cloud to Edge deployment?

Orchestration coordinates containerized workloads at the edge, often using lightweight Kubernetes variants like K3s, to enable consistent deployment, updates, and health monitoring across many edge sites. This ensures scalable, secure management of the edge infrastructure and seamless policy enforcement.
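The core of many orchestrators is a reconcile loop that compares a desired state with what each site reports. The sketch below illustrates that idea in plain Python, not through the K3s or Kubernetes API. It assumes each site reports its running version and the time of its last heartbeat, and it sorts sites into unreachable, needing an update, or healthy. The `SiteStatus` type, the timeout, and the version strings are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class SiteStatus:
    running_version: str
    last_heartbeat: float  # seconds since epoch, as reported by the site


def reconcile(
    desired_version: str,
    fleet: Dict[str, SiteStatus],
    now: float,
    timeout_s: float,
) -> Dict[str, List[str]]:
    """Group sites by the action the management plane should take next."""
    plan: Dict[str, List[str]] = {"unreachable": [], "update": [], "ok": []}
    for site, status in sorted(fleet.items()):
        if now - status.last_heartbeat > timeout_s:
            plan["unreachable"].append(site)  # investigate before pushing changes
        elif status.running_version != desired_version:
            plan["update"].append(site)  # drifted from the desired state
        else:
            plan["ok"].append(site)
    return plan


if __name__ == "__main__":
    fleet = {
        "plant-a": SiteStatus("1.4.0", 1000.0),
        "plant-b": SiteStatus("1.3.2", 995.0),
        "store-9": SiteStatus("1.4.0", 700.0),
    }
    print(reconcile("1.4.0", fleet, now=1000.0, timeout_s=60.0))
```

Running this check repeatedly, rather than issuing one-off commands, lets the fleet converge on the desired version even when some sites are temporarily offline.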

| Key Point | Description | Patterns / Examples | Benefits / Implications |
|---|---|---|---|
| What is Cloud to Edge deployment? | Architectural practice distributing workloads across a continuum, from centralized cloud data centers to distributed edge nodes near data sources; it complements the cloud rather than replacing it, focusing on latency-sensitive, bandwidth-intensive, and privacy-conscious workloads; the edge handles local processing and fast decisions while the cloud handles deeper analytics and orchestration. | Central cloud with distributed edge nodes; Fog computing and micro edge layers; Edge-first analytics with cloud-backed orchestration; Containerized edge workloads with orchestration; Data-centric security and policy-driven deployment | Enables real-time insights, reduces data egress, and improves resilience and user experiences across industries. |
| Why now? | Latency requirements are increasingly critical; sending every data point to the cloud is often impractical due to bandwidth and reliability constraints; privacy and data sovereignty laws push processing to the edge; advances in edge hardware, containers, and orchestration enable scalable edge deployments. | Latency-sensitive use cases (autonomous systems, industrial automation, real-time personalization); bandwidth/connectivity limits; privacy/compliance needs; edge hardware and lightweight Kubernetes (e.g., K3s) | Faster insights, reduced cloud costs, improved regulatory compliance, and greater resilience. |
| Common architectural patterns | Central cloud with distributed edge nodes; Fog computing; Edge-first analytics with cloud-backed orchestration; Containerized edge workloads; Data-centric security and policy-driven deployment | Central cloud with distributed edge nodes: heavy analytics in the cloud, data collection and local processing at edge sites | Hybrid benefits: lower latency, data locality, and reduced data egress. |
| Fog computing and micro edge layers | Tiered compute near data sources forming a ‘fog’ to preprocess before cloud; multiple small compute nodes support large IoT deployments and multi-site installations. | Fog computing; micro-edge layers; data filtering at edge | Improved scalability, data quality, and network efficiency. |
| Edge-first analytics with cloud-backed orchestration | Edge devices run lightweight analytics and inference; only anomalous events or aggregated summaries are sent to the cloud for deeper analytics, dashboards, and model updates. | Edge gateways; cloud-backed orchestration | Faster local decisions, reduced data transfers, and lighter cloud processing load. |
| Containerized edge workloads with orchestration | Edge workloads run in containers (often with lightweight Kubernetes like K3s) for portability, security, and centralized management across sites. | K3s; edge-friendly orchestrators | Consistent deployments, easier updates, and scalable management. |
| Data-centric security and policy-driven deployment | Security-first patterns ensure data residency, encryption, and access controls at the edge, with secure channels back to the cloud for updates and governance. | Zero-trust; encryption; secure boot; patching; auditable logs | Stronger compliance, trust, and governance across distributed environments. |
| Edge devices and gateways | Sensors, cameras, industrial controllers, and gateways collect data and perform initial processing; closest to the data source for latency-sensitive decisions. | Edge sensors and gateways | Low-latency decisions and offline resilience. |
| Edge infrastructure | Edge servers or micro data centers near data sources; provides compute, storage, and connectivity for local workloads. | Edge data centers; micro data centers | Localized compute reduces backhaul and enhances resilience. |
| Orchestration and management | Robust management plane for deployment, updates, and health monitoring across many edge sites; containerized workloads. | Lightweight Kubernetes (e.g., K3s); edge orchestration tools | Operational efficiency and fleet-wide governance. |
| Data pipelines and streaming | Efficient data movement between edge and cloud; messaging protocols (MQTT, AMQP), data buses, and streaming platforms. | MQTT, AMQP; streaming platforms | Reliable, timely data transfer supporting synchronized analytics. |
| AI and inference at the edge | Deploy trained models to edge devices for real-time inference; accelerators (GPUs, TPUs, or edge AI chips) boost throughput. | Edge AI accelerators; on-device inference | Low-latency, privacy-preserving inference with resilient operation. |
| Security, governance, and policy | Identity management, encryption, secure boot, patching, and auditable logs are essential to trust in a distributed edge-centric architecture. | Zero-trust; encryption; patch management; auditable logs | Regulatory compliance and trusted distributed operations. |
| Benefits overview | Latency reduction, bandwidth optimization, enhanced reliability, privacy and data sovereignty, and scalable hybrid architectures. | | Organizational value across industries and use cases. |
| Industry use cases | Industrial IoT/manufacturing; Smart cities/transportation; Healthcare; Retail; Energy/utilities. | Industry examples | Demonstrated benefits include operational efficiency, safety, and improved customer experiences. |
| Challenges and best practices | Selective offloading, strong security, governance; offline-first strategies; observability; resource management and orchestration. | Security at scale; data governance; reliability and update management; observability; resource management | Guided implementation with risk mitigation and reliable deployments. |
| Roadmap to implementing Cloud to Edge deployment | Assess workloads; define reference architecture; select technologies; pilot in controlled environments; phased rollout; implement security; establish observability; scale and optimize. | Stage 1–8: assess, architecture, tech, pilot, rollout, security, observability, scale | Provides a practical, milestone-driven path to adoption. |
| The future of Cloud to Edge deployment | Evolving toward autonomous orchestration, stronger edge AI, and deeper 5G/6G integration; standardization and governance around distributed intelligence. | Autonomous orchestration; edge AI advancements; 5G/6G integration | Sets expectations for scalable, intelligent, and secure distributed systems. |

Summary

Cloud to Edge deployment represents an evolution of modern IT architectures, blending cloud-scale analytics with edge-computing resilience to deliver real-time insights and smarter experiences. By placing compute and inference closer to data sources, organizations can reduce latency, save bandwidth, and strengthen data governance while retaining the cloud's power for heavy analytics, orchestration, and long-term storage. This distributed approach supports industrial automation, intelligent applications, and responsive customer experiences across industries, and succeeding with it in practice requires thoughtful patterns, clear data policies, robust security, and scalable management.