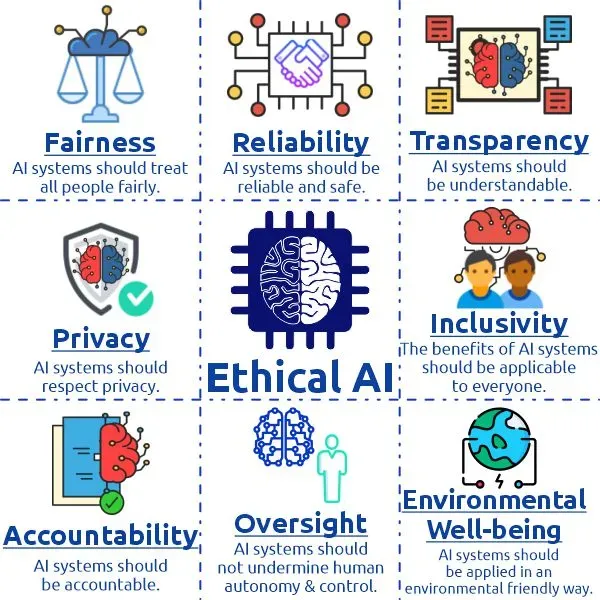

AI and automation ethics guides how organizations pursue smarter processes while safeguarding people, data, and trust in operations that automation increasingly touches. From governance that ties performance to responsible AI and automation, to risk-aware measurement grounded in AI productivity ethics, leaders must balance throughput with fairness, safety, and rights, building safeguards into design, testing, deployment, and ongoing operation. As workplaces become more AI-enabled, the challenge is not only how to do more but how to do it responsibly: ensuring data quality, privacy protection, inclusive design, and clear accountability. That is the core of automation ethics in the workplace, and it is what lets automation strengthen trust rather than erode it, long after initial implementation. This article draws on practical governance models, transparent practices, explainability, bias auditing, and sustained stakeholder dialogue to show how balancing productivity and ethics in AI can yield durable gains for customers, employees, regulators, and the broader ecosystem. By weaving governance into strategy and day-to-day operations, and by sustaining collaboration across legal, compliance, IT, product, and operations, organizations can unlock scalable value while respecting dignity, autonomy, and safety, so that speed, reliability, and accountability coexist.

The topic is easier to grasp through its neighboring terms than through a single label. Related ideas include trustworthy AI systems, governance of intelligent automation, privacy-preserving analytics, and bias-aware design, which together support reliable performance. Phrases such as AI productivity ethics, automation ethics in the workplace, responsible AI and automation, balancing productivity and ethics in AI, and the ethics of AI automation all point to the same underlying concerns: governance, fairness, and accountability. Viewing the topic through these connected lenses shows how policy choices, design decisions, and ongoing monitoring turn broad principles into concrete, trustworthy practices.

Governance That Aligns AI Productivity Ethics with Organizational Values

Effective governance sits at the intersection of speed and ethics. By establishing a cross-functional governance body that includes data science, IT, legal, compliance, and business stakeholders, organizations can translate strategic values into concrete AI practices. This framework embodies AI productivity ethics, ensuring that performance gains do not outpace commitments to fairness, safety, and rights. Governance becomes the compass that guides how, where, and why AI is deployed across functions, from customer service to supply chain planning.

A robust governance model defines ethical principles, assigns clear ownership for AI assets, and requires periodic reviews of both performance and impact. Regular audits, risk assessments, and regulatory alignment help ensure that automation projects are not pursued solely for throughput. Instead, governance channels accountability, transparent decision-making, and a loop of continuous improvement that respects people and data while still delivering value.
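
Clear ownership and periodic review only work if someone checks them. A minimal way to make that checkable is a registry of AI assets with an accountable owner and a last-review date, plus a routine that flags breaches. The sketch below assumes a hypothetical `AIAsset` record and a 90-day review cadence; neither comes from any standard, and a real registry would carry far more fields.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class AIAsset:
    """Hypothetical registry entry for one model or automation."""
    name: str
    owner: Optional[str]            # accountable person or team; None = unassigned
    last_review: date               # most recent performance-and-impact review
    review_interval_days: int = 90  # assumed review cadence, not a standard


def governance_findings(assets: List[AIAsset], today: date) -> List[str]:
    """List policy breaches: missing owners and overdue periodic reviews."""
    findings: List[str] = []
    for asset in assets:
        if not asset.owner:
            findings.append(f"{asset.name}: no accountable owner")
        due = asset.last_review + timedelta(days=asset.review_interval_days)
        if today > due:
            findings.append(f"{asset.name}: review overdue since {due.isoformat()}")
    return findings


registry = [
    AIAsset("ticket-router", "support-ops", date(2024, 1, 10)),
    AIAsset("demand-forecast", None, date(2024, 3, 1)),
]
for finding in governance_findings(registry, today=date(2024, 5, 1)):
    print(finding)
```

With this sample registry, the check reports that the ticket-router review lapsed on 2024-04-09 and that demand-forecast has no owner. Feeding these findings to the governance body turns "periodic reviews" from a policy statement into a recurring agenda item.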

Balancing Speed and Fairness: Ethics of AI Automation in Daily Operations

The pursuit of productivity can be meaningful only when it harmonizes with ethical considerations. Substantial gains in throughput and decision speed must be matched with deliberate attention to fairness, explainability, and user trust. This is where balancing productivity and ethics in AI becomes a practical discipline, not a theoretical ideal, and why automation ethics in the workplace matters for everyday operations.

Beyond metrics like latency or volume, organizations should track indicators of bias, data quality, and user satisfaction. By integrating responsible AI and automation practices into performance dashboards, teams can identify unintended harms early and adjust models, data sources, or interfaces accordingly. The result is a more resilient, trusted system that delivers value without eroding stakeholder confidence.
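
One way to give bias, data quality, and satisfaction the same weight as latency is a threshold check that runs on every dashboard snapshot. The sketch below is illustrative only: the metric names, the `GUARDRAILS` table, and every limit are hypothetical placeholders that a team would set through its own risk assessment.

```python
from typing import Dict, List, Tuple

# Hypothetical guardrails: metric name -> (direction, limit).
# "max" means the value must stay at or below the limit; "min" at or above it.
GUARDRAILS: Dict[str, Tuple[str, float]] = {
    "p95_latency_ms": ("max", 800.0),     # traditional throughput metric
    "missing_field_rate": ("max", 0.02),  # data-quality indicator
    "approval_rate_gap": ("max", 0.10),   # bias indicator: gap between groups
    "user_satisfaction": ("min", 4.0),    # e.g. mean rating on a 1-5 scale
}


def check_dashboard(metrics: Dict[str, float]) -> List[str]:
    """Compare one snapshot of dashboard metrics against the guardrails."""
    alerts: List[str] = []
    for name, (direction, limit) in GUARDRAILS.items():
        if name not in metrics:
            # A metric that silently stops reporting is itself a warning sign.
            alerts.append(f"{name}: not reported")
            continue
        value = metrics[name]
        breached = value > limit if direction == "max" else value < limit
        if breached:
            alerts.append(f"{name}: {value} breaches {direction} {limit}")
    return alerts
```

A snapshot with fast responses and happy users but a 0.14 approval-rate gap between groups still raises an alert, which is the point: speed alone never clears the dashboard.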

Data Stewardship: Privacy, Bias, and Transparency as Core Pillars

Privacy and data protection are foundational to sustainable automation. Because automation relies on rich data sets, principled data handling, access controls, data minimization, and robust anonymization are non-negotiable. Building privacy-by-design into every stage lets teams deploy automation with confidence and reduces the risk of misuse. This emphasis on privacy supports broader efforts in responsible AI and automation by aligning technical capability with societal expectations.
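
Data minimization and identifier protection can be applied before records ever reach a model. The sketch below keeps only an allow-list of fields and replaces the direct identifier with a keyed HMAC-SHA256 digest using Python's standard `hmac` module. The field names and allow-list are hypothetical. Note that keyed hashing is pseudonymization, not anonymization: anyone holding the key can re-link records, so the key must be stored separately and access-controlled.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable

# Hypothetical allow-list: the only fields the downstream model needs.
ALLOWED_FIELDS = {"customer_id", "region", "tenure_months", "ticket_category"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 hex digest."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: Dict[str, Any], key: bytes,
                    allowed: Iterable[str] = ALLOWED_FIELDS) -> Dict[str, Any]:
    """Drop fields not on the allow-list and pseudonymize the direct identifier."""
    allowed_set = set(allowed)
    kept = {k: v for k, v in record.items() if k in allowed_set}
    if "customer_id" in kept:
        kept["customer_id"] = pseudonymize(str(kept["customer_id"]), key)
    return kept
```

The same key always yields the same digest, so records can still be joined for analysis, while names, emails, and other fields outside the allow-list never leave the source system.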

Bias and transparency go hand in hand with data stewardship. AI systems learn from historical data, which can reflect inequities. Regular bias audits, inclusive design processes, and transparent model documentation help ensure fair, justifiable decisions. When stakeholders can understand how a system arrives at a result, accountability follows naturally, reinforcing trust in automation rather than skepticism about it.
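
A common first screen in a bias audit is the "four-fifths rule" from US employment-selection guidance: a group whose selection rate is below 80% of the highest group's rate is flagged for closer review. The sketch below computes that impact ratio from hypothetical `(group, selected)` pairs. It is a screening heuristic, not proof of fairness or unfairness, and the 0.8 threshold is a parameter rather than a universal constant.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def four_fifths_audit(outcomes: Iterable[Tuple[str, bool]],
                      threshold: float = 0.8) -> Dict[str, float]:
    """Return groups whose impact ratio (rate / highest rate) is below threshold."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected; the ratio is undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}
```

If group A is selected 50 times out of 100 and group B 30 times out of 100, B's impact ratio is 0.6 and it is flagged. The audit then shifts to explaining why: data, features, or the decision threshold itself.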

Human-Centered Design and Explainability in Automation

Human-centered design places people at the center of AI-enabled workflows. Interfaces should present AI-assisted recommendations in a clear, interpretable way, enabling users to understand the rationale and to challenge or override automated outputs when appropriate. This focus on explainability is a cornerstone of the ethics of AI automation and supports a more collaborative human–machine dynamic.
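
For a simple linear scoring model, the rationale can be shown directly as each feature's contribution (weight times value), and an override can be recorded alongside the model's original output. The sketch below assumes a hypothetical model with made-up weights; non-linear models would need a dedicated attribution method, which this example does not implement.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical linear scoring model: score = BIAS + sum(weight * feature value).
WEIGHTS: Dict[str, float] = {"days_overdue": -0.04, "on_time_payments": 0.03, "open_disputes": -0.5}
BIAS = 0.2


@dataclass
class Recommendation:
    decision: str
    score: float
    reasons: List[Tuple[str, float]]  # (feature, contribution), largest magnitude first
    override: Optional[str] = None    # set only when a person overrides the model
    override_note: str = ""

    def apply_override(self, decision: str, note: str) -> None:
        """Record a human override; a written justification is mandatory."""
        if not note.strip():
            raise ValueError("an override must include a justification")
        self.override, self.override_note = decision, note

    @property
    def final_decision(self) -> str:
        return self.override if self.override is not None else self.decision


def recommend(features: Dict[str, float], top_k: int = 2) -> Recommendation:
    """Score a case and keep the top_k feature contributions as the rationale."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    reasons = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    return Recommendation("approve" if score >= 0 else "refer", score, reasons)
```

For a case with 10 days overdue, 12 on-time payments, and one open dispute, the model recommends "refer" and cites the open dispute and the overdue days as its main reasons. A reviewer who knows the dispute was resolved can override it, and the justification stays with the record.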

In practice, user-centric design pairs technical rigor with accessible communication. Model documentation, transparent interfaces, and intuitive controls help maintain trust, especially in high-stakes contexts. By weaving explainable AI into product development and operations, teams practice responsible AI and automation without giving up the efficiency that automation delivers.
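
Model documentation is easier to enforce when it has a fixed shape. The sketch below is a hypothetical, minimal documentation record loosely in the spirit of "model cards": it lists the sections a reviewer expects, reports which ones are empty, and renders the card as plain Markdown. The field set is an assumption, not a published template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical minimal documentation record for one model."""
    name: str
    version: str
    intended_use: str
    owner: str = ""
    training_data: str = ""
    out_of_scope_uses: List[str] = field(default_factory=list)
    evaluation_groups: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of empty sections, so reviewers can block incomplete cards."""
        return [name for name, value in vars(self).items() if not value]

    def to_markdown(self) -> str:
        """Render the card as plain Markdown for a wiki or repository README."""
        def bullets(items: List[str]) -> str:
            return "\n".join(f"- {item}" for item in items) or "- (not documented)"

        return "\n".join([
            f"# {self.name} v{self.version}",
            f"Owner: {self.owner or '(unassigned)'}",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data or '(not documented)'}",
            "Out-of-scope uses:", bullets(self.out_of_scope_uses),
            "Groups evaluated for fairness:", bullets(self.evaluation_groups),
            "Known limitations:", bullets(self.known_limitations),
        ])
```

Making `missing_sections()` part of a release checklist means an undocumented limitation shows up as a visible gap rather than a silent omission.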

Human in the Loop, Accountability, and Trust

Not every decision should be fully automated, particularly in high-stakes areas. A human-in-the-loop approach preserves essential human judgment, providing oversight while capturing productivity gains. This stance embodies responsible AI and automation by balancing machine speed with human insight and moral responsibility.
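
A human-in-the-loop policy can be expressed as a routing rule: some decision types always go to a person, and everything else is automated only above a confidence threshold. The decision types in `HIGH_STAKES` and the `MIN_CONFIDENCE` value below are hypothetical and would come from the organization's own risk assessment.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical policy: decision types that always need a person, and the
# minimum model confidence required to act automatically on everything else.
HIGH_STAKES = {"loan_denial", "account_termination", "medical_triage"}
MIN_CONFIDENCE = 0.9


@dataclass
class Decision:
    case_id: str
    kind: str
    model_output: str
    confidence: float  # model's own confidence in [0, 1]


def route(decision: Decision) -> str:
    """Return 'auto' or 'human_review' for a single model decision."""
    if decision.kind in HIGH_STAKES:
        return "human_review"  # stakes override confidence
    if decision.confidence < MIN_CONFIDENCE:
        return "human_review"  # the model is unsure
    return "auto"


def triage(decisions: List[Decision]) -> Tuple[List[str], List[str]]:
    """Split case IDs into (automated, sent to human review)."""
    auto, review = [], []
    for d in decisions:
        (auto if route(d) == "auto" else review).append(d.case_id)
    return auto, review
```

Routine, high-confidence cases keep their speed, while a confident but high-stakes decision such as a loan denial still reaches a reviewer.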

Accountability frameworks ensure that responsibility is traceable and actionable when issues arise. Clear remediation paths, decision logs, and audit trails help institutions demonstrate governance and compliance. With robust human oversight and transparent accountability, organizations can sustain trust even as automation scales across processes.
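
Decision logs are most useful for audits when later tampering is detectable. One common technique is a hash chain: each entry stores the SHA-256 hash of its own contents plus the previous entry's hash, so editing any past entry breaks verification. The sketch below is a minimal in-memory version with a hypothetical `AuditLog` class. It is tamper-evident rather than tamper-proof: someone who can rewrite the entire chain can recompute every hash, so production systems typically anchor the latest hash in a separate, access-controlled store.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only decision log in which each entry commits to the previous one."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(body: Dict[str, Any]) -> str:
        # sort_keys gives a stable serialization, so the same body hashes the same way.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, actor: str, action: str, details: Dict[str, Any]) -> Dict[str, Any]:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False means the log was altered."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Logging both the model's recommendation and any human override as separate entries makes the remediation path traceable: who decided what, when, and on what basis.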

Measuring, Certification, and Continuous Improvement in AI Ethics

Measuring ethics alongside performance requires dashboards that monitor fairness, drift, and user feedback in addition to traditional metrics. Continuous monitoring supports AI productivity ethics by revealing when models begin to diverge from expected behavior and enabling timely interventions. Certification programs for AI systems—covering data provenance, model interpretability, and safety testing—help buyers and users assess governance maturity and reliability.
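
Drift monitoring usually compares the distribution of live inputs or scores with a reference sample from training or validation. One widely used measure is the Population Stability Index (PSI), computed over shared bins as the sum of (live share minus reference share) times the log of their ratio. The sketch below is a minimal implementation. The bin edges are caller-supplied assumptions, and the often-quoted reading (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) is an industry rule of thumb, not a standard.

```python
import bisect
import math
from typing import List, Sequence


def psi(reference: Sequence[float], live: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index of `live` against `reference`.

    `edges` are ascending bin boundaries; values outside them are clamped into
    the first or last bin. `eps` keeps empty bins from producing log(0).
    """
    n_bins = len(edges) - 1

    def proportions(values: Sequence[float]) -> List[float]:
        if not values:
            raise ValueError("cannot compute PSI on an empty sample")
        counts = [0] * n_bins
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            counts[min(max(i, 0), n_bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    p_ref, p_live = proportions(reference), proportions(live)
    return sum((a - r) * math.log(a / r) for r, a in zip(p_ref, p_live))
```

Running this on a schedule and sending values above an agreed threshold to the model owner is one concrete form of the "timely intervention" described above. The same check can be applied per group to catch drift that affects only part of the population.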

Looking ahead, standards bodies, industry consortia, and regulatory developments will shape how organizations demonstrate ethical competence. Continuous improvement means embracing feedback loops, updating guardrails, and validating ethical assumptions through pilots and real-world deployment. In this dynamic landscape, AI ethics evolves from a one-time policy into a living capability that sustains both productivity and societal trust.

Frequently Asked Questions

What is AI productivity ethics and why is it important in automation?

AI productivity ethics focuses on achieving throughput and speed without sacrificing fairness, safety, or privacy. It emphasizes measuring value beyond speed—such as accuracy, explainability, and bias mitigation—and it reinforces governance and responsible AI practices to ensure automation remains trustworthy and sustainable.

How does governance support automation ethics in the workplace?

Governance provides an accountable structure across data science, IT, legal, compliance, and business teams to oversee AI projects. It defines ethical principles, assigns clear ownership, and requires ongoing reviews of performance and impact, addressing automation ethics in the workplace and ensuring alignment with values and regulations.

What is responsible AI and automation, and how should it guide design decisions?

Responsible AI and automation means building systems with guardrails, transparency, and human oversight to prevent harm and bias. It emphasizes data governance, risk assessment, explainability, and inclusive design, guiding product development and operations toward trustworthy, ethically aligned outcomes.

How can organizations implement strategies for balancing productivity and ethics in AI?

To balance productivity and ethics in AI, adopt a repeatable framework: establish governance, perform risk and impact assessments, invest in data quality, prioritize explainability, use human-in-the-loop where appropriate, and implement continuous monitoring. This approach ensures efficiency gains do not come at the expense of fairness or safety.

Why are transparency and interpretability critical in the ethics of AI automation?

Transparency and interpretability help stakeholders understand how automated decisions are made, which builds trust and accountability. Practices such as explainable AI, thorough model documentation, and clear user interfaces enable responsible use and make remediation easier when issues arise.

What practical steps help mitigate bias and protect privacy in AI automation?

Practical steps include bias audits, privacy-by-design principles, robust data governance, data minimization, strict access controls, and ongoing monitoring. These measures address the ethics of AI automation by reducing unfair outcomes while preserving trust and compliance.

| Topic | Key Points |
|---|---|
| The Productivity Imperative in AI and Automation | |
| Ethical Dimensions: Privacy, Bias, Transparency, and Accountability | |
| Balancing Productivity and Ethics: Practical Frameworks | |
| Responsible AI and Automation in Practice | |
| Real-World Contexts: Healthcare, Finance, and Manufacturing | |
| Risks, Trade-offs, and Mitigation Strategies | |
| The Path Forward: Standards, Certification, and Continuous Improvement | |

Summary

The table above summarizes the main topics of this article, highlighting how productivity and ethics intersect across governance, privacy, bias, transparency, and accountability in AI and automation.