Edge Computing vs Cloud is reshaping how organizations handle data at the source. It influences architectural decisions, device-level processing, and the experience of users who expect instant insights even when networks are unreliable or intermittently connected, and it pushes IT leaders to reevaluate security footprints, data locality policies, and vendor partnerships. In practice, the choice determines where workloads run, how data moves, and how much latency can be removed by processing at the edge, with policies spanning edge devices, gateways, and centralized data centers. It also shapes developer workflows, monitoring strategies, and the balance of privacy, compliance, and performance across geographic regions. Cloud computing differences become clearer as enterprises weigh centralized scalability, governance, and the breadth of cloud-native services against distributed edge nodes that emphasize locality, fault tolerance, and domain-specific optimization, alongside long-term total cost of ownership, upgrade paths, and ecosystem compatibility. To navigate these trade-offs, many enterprises adopt a hybrid cloud strategy that connects on-premises systems, edge devices, regional gateways, and public cloud services into one continuum. Clear data pipelines, governance, and automation help avoid silos, so latency-sensitive workloads can sit near their data sources while the overall system stays scalable, reliable, and compliant across jurisdictions.
Organizations can strengthen outcomes further with data governance and security practices that span both the edge and the cloud. These practices cover data sovereignty requirements, encryption in transit and at rest, and identity and access policies that apply consistently across architectures. Backed by continuous assurance, audits, and adaptive risk management, this integrated approach helps meet regulatory demands while enabling faster decision cycles and more resilient operations.

Another way to frame the topic is as a balance between local processing and centralized cloud services, with computing performed near data sources or in distant data centers depending on needs. Related terms such as proximal computing, on-site processing, distributed computing, edge-to-cloud orchestration, and cloud-native analytics describe overlapping capabilities, although they are not strictly synonyms. Keeping them in view helps readers understand the relationship between edge devices, gateway hardware, regional data hubs, and global cloud platforms. In short, the same decision is expressed through different terms that emphasize locality, governance, or scalability.

Edge Computing vs Cloud: Defining Proximity, Latency, and Scale

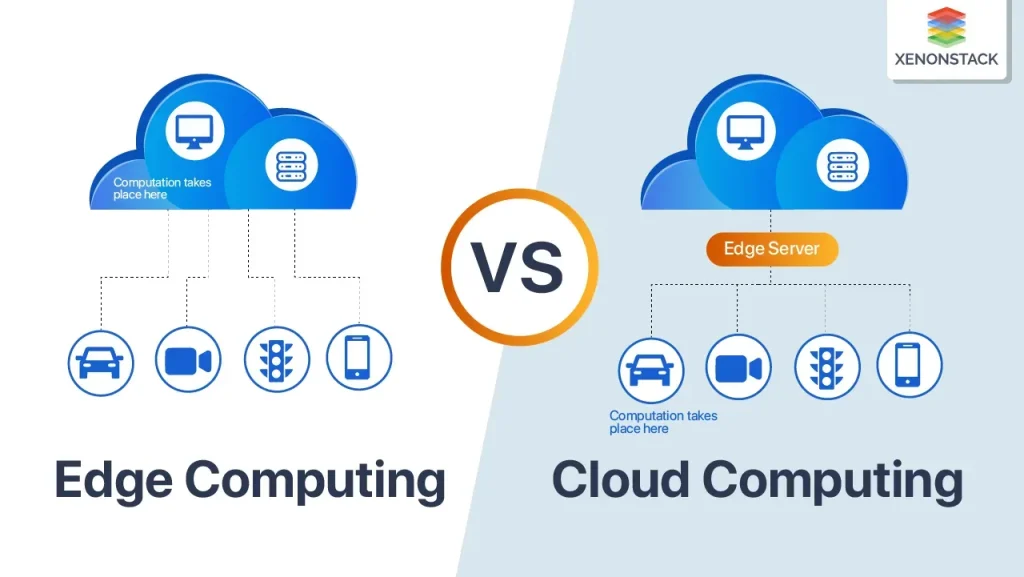

Edge computing brings processing closer to where data is produced, on devices, gateways, or local edge servers, while cloud computing centralizes work in remote data centers. This proximity shortens the distance data has to travel, which lowers latency and enables near real-time actions. Understanding these dynamics is essential when deciding whether to move a workload toward the edge or toward a centralized cloud. Edge computing advantages include faster feedback, reduced bandwidth use, and local autonomy that keeps operations responsive.

However, proximity also introduces complexity: distributed management, local security, and edge-to-cloud data synchronization become critical design concerns. This is where a hybrid cloud strategy often emerges as a pragmatic answer, letting latency-sensitive tasks run at the edge while the cloud handles long-running analytics and archival storage. Viewed through the lens of Edge Computing vs Cloud, organizations can map workloads to business outcomes while balancing speed, scale, and governance.

Latency Reduction with Edge Computing: Real-Time Outcomes and Bandwidth Savings

Latency reduction with edge computing translates into immediate, local responses. When processing happens near the data source—on sensors, gateways, or edge servers—systems can react within milliseconds, enabling real-time control for industrial automation, autonomous devices, and responsive user experiences. This capability underpins the edge computing advantages by removing round trips to distant data centers and accelerating decision-making.
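
To put a rough number on those round trips, the sketch below estimates the minimum network delay that distance alone imposes. It assumes signals travel through optical fiber at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores routing, queuing, and processing time, so real latencies will be higher. The function names and the example distances are illustrative.

```python
# Approximate signal speed in optical fiber: ~200,000 km/s = 200 km per ms.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


def remaining_budget_ms(budget_ms: float, distance_km: float) -> float:
    """Time left for actual processing after the unavoidable round trip."""
    return budget_ms - min_round_trip_ms(distance_km)


if __name__ == "__main__":
    # Hypothetical distances: a regional data center vs. an on-site gateway.
    for label, km in [("cloud region, 1500 km", 1500), ("edge gateway, 1 km", 1)]:
        print(label, "->", min_round_trip_ms(km), "ms minimum round trip")
```

Under these assumptions, a control loop with a 10 ms budget cannot be served from a site 1,500 km away regardless of how fast the servers are, while a nearby gateway leaves almost the whole budget for computation.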

Beyond speed, processing at the edge reduces backhaul bandwidth and cloud egress costs. By performing filtering, aggregation, and inference locally, only meaningful summaries or anomalies travel upstream, keeping raw data at the edge. This supports a hybrid cloud strategy that uses edge for immediate tasks and the cloud for deeper analytics and long-term storage, delivering scalable, cost-efficient architectures while preserving data integrity.
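
As a minimal sketch of that local filtering and aggregation, the function below reduces a window of raw sensor readings to a small summary and forwards only the values that look anomalous. The z-score rule, the threshold of 3.0, and the field names are assumptions chosen for illustration; a production system would pick a detection method suited to its data.

```python
from statistics import mean, pstdev


def summarize_window(readings, z_threshold=3.0):
    """Reduce a non-empty window of raw readings to a summary plus outliers.

    Only the returned dictionary would be sent upstream; the raw readings
    stay on the edge device.
    """
    avg = mean(readings)
    spread = pstdev(readings)  # population standard deviation of the window
    anomalies = [
        r for r in readings
        if spread > 0 and abs(r - avg) / spread > z_threshold
    ]
    return {
        "count": len(readings),
        "mean": avg,
        "min": min(readings),
        "max": max(readings),
        "anomalies": anomalies,
    }


if __name__ == "__main__":
    window = [20.0] * 19 + [80.0]  # hypothetical temperature samples
    print(summarize_window(window))
```

Here twenty raw readings collapse into one small record, and the single spike is the only individual value that travels to the cloud.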

Hybrid Cloud Strategy: Orchestrating Edge and Cloud for Flexible Performance

A hybrid cloud strategy frames the architecture as a continuum rather than a binary choice. Workloads are placed where they perform best—edge for latency-sensitive processing and cloud for scalable analytics and storage—while ensuring consistent policies and data flows across sites. This approach provides flexibility, accelerates time-to-value, and supports industry-specific needs such as regulatory compliance and data governance.

Effective orchestration requires clear interfaces, data pipelines, and governance rules to prevent drift between edge and cloud datasets. The hybrid model benefits from centralized visibility, standardized security controls, and automation (infrastructure as code, CI/CD for edge software) that keep distributed environments aligned. In short, a hybrid cloud strategy enables organizations to scale intelligently while maintaining control over performance and risk.
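
One simple way to get that centralized visibility is to compare a fingerprint of the policy each site is running against the fingerprint of the reference copy. The sketch below assumes policies are plain JSON-serializable dictionaries; the site names and policy keys are hypothetical, and real deployments would usually rely on their configuration management tooling for this check.

```python
import hashlib
import json


def fingerprint(policy: dict) -> str:
    """Hash a policy in a canonical form so key order does not matter."""
    canonical = json.dumps(policy, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def drifted_sites(reference_policy: dict, edge_policies: dict) -> list:
    """Return the names of edge sites whose policy differs from the reference."""
    expected = fingerprint(reference_policy)
    return sorted(
        site for site, policy in edge_policies.items()
        if fingerprint(policy) != expected
    )


if __name__ == "__main__":
    reference = {"tls": "1.3", "retention_days": 30}
    sites = {
        "plant-a": {"retention_days": 30, "tls": "1.3"},  # same content, new order
        "plant-b": {"tls": "1.2", "retention_days": 30},  # outdated TLS setting
    }
    print(drifted_sites(reference, sites))
```

Comparing hashes rather than full documents keeps the telemetry sent from each site small, which matters on constrained links.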

Data Sovereignty and Security at the Edge: Balancing Local Control with Global Compliance

Data sovereignty and security considerations are central to any Edge Computing vs Cloud decision, especially where regulations require sensitive data to remain within specific jurisdictions. Edge processing lets organizations keep raw or regulated data locally, reducing exposure to external data centers and supporting localization mandates while still enabling insights through secure aggregation at the gateway. This balance is a core driver of many edge-first strategies in regulated industries such as healthcare, finance, and critical infrastructure.
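
The idea of keeping regulated data local while still sending useful information upstream can be sketched as a filter applied at the gateway. The field names below are hypothetical, and removing fields is only one step: it does not by itself amount to anonymization, which depends on the regulation and on what the remaining fields reveal.

```python
def strip_regulated_fields(records, regulated_fields):
    """Return copies of the records without the fields that must stay on site.

    The original records are left untouched so they can be stored locally.
    """
    return [
        {key: value for key, value in record.items() if key not in regulated_fields}
        for record in records
    ]


if __name__ == "__main__":
    local_records = [
        {"patient_id": "p1", "heart_rate": 72, "site": "clinic-7"},
        {"patient_id": "p2", "heart_rate": 88, "site": "clinic-7"},
    ]
    upstream = strip_regulated_fields(local_records, {"patient_id"})
    print(upstream)  # what would be allowed to leave the site in this sketch
```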

Security at the edge also demands rigorous measures: device hardening, secure boot, trusted execution environments, encryption in transit and at rest, and robust identity and access management. Because every additional device is a potential entry point, a well-designed edge-to-cloud architecture relies on consistent security policies, timely updates, and regular vulnerability testing to keep the attack surface from growing unchecked. Data sovereignty and security thus become governance challenges as much as technical ones, requiring ongoing risk assessment and disciplined change management.

Cloud Computing Differences: Scale, Resilience, and Global Access

Cloud computing differences revolve around scale, resilience, and global reach. Centralized cloud platforms offer elastic compute, large-scale storage, managed services, and enterprise-grade governance across regions. They enable rapid experimentation, AI/ML model deployment, and standardized operations for diverse workloads, making them a natural fit for long-running analytics, batch processing, and cross-border collaboration. When comparing Edge vs Cloud, the cloud excels at capacity, consistency, and global availability.

However, the cloud is not a one-size-fits-all solution. Cloud computing differences must be weighed against latency sensitivity, bandwidth constraints, and edge autonomy. Many organizations adopt a hybrid approach, reserving cloud power for insights, orchestration, and archival storage while keeping real-time processing near the data source. This balance preserves the strengths of cloud-native services while maintaining edge responsiveness and compliance with data location requirements.

Edge-to-Cloud Migration and Governance: A Practical Framework for Modern Infrastructures

Migration planning for edge-to-cloud deployments begins with mapping workloads to outcomes and identifying which tasks benefit from local processing versus centralized analysis. Start with non-critical workloads that tolerate occasional latency and progressively migrate more demanding tasks to the edge as confidence and tooling mature. An incremental approach reduces risk while establishing the architectural patterns needed for scalable, distributed systems.

Governance, security, and automation are essential to sustaining an efficient Edge-to-Cloud model. Implement infrastructure as code, CI/CD pipelines for edge software, and centralized telemetry to maintain consistency across sites. Define data synchronization rules, conflict resolution strategies, and policy enforcement so edge and cloud components operate as a cohesive whole. In practice, a well-governed hybrid architecture delivers predictable performance, lower risk, and clearer ROI.
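
A conflict resolution strategy can be as simple as "last writer wins". The sketch below assumes each value is stored with a timestamp and that edge and cloud clocks are reasonably synchronized, which is a significant assumption in practice; ties are resolved in favor of the cloud copy. The key names are illustrative, and other strategies (version vectors, application-specific merges) may fit better when clocks cannot be trusted.

```python
def merge_last_writer_wins(edge_state: dict, cloud_state: dict) -> dict:
    """Merge two {key: (timestamp, value)} maps, keeping the newer entry per key.

    On equal timestamps the cloud entry is kept.
    """
    merged = dict(cloud_state)
    for key, (timestamp, value) in edge_state.items():
        if key not in merged or timestamp > merged[key][0]:
            merged[key] = (timestamp, value)
    return merged


if __name__ == "__main__":
    edge = {"setpoint": (105, 21.5), "mode": (90, "eco")}
    cloud = {"setpoint": (100, 20.0), "mode": (95, "auto"), "owner": (50, "ops")}
    print(merge_last_writer_wins(edge, cloud))
```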

Frequently Asked Questions

Edge Computing vs Cloud: How does latency reduction with edge computing influence where you run workloads?

Latency budgets drive Edge Computing vs Cloud decisions. For workloads requiring sub-second responses, latency reduction with edge computing makes edge deployment the preferred choice, with local data preprocessing and real-time inference. The cloud remains ideal for large-scale analytics and long-term storage, so many organizations adopt a hybrid cloud strategy to balance both.

How does a hybrid cloud strategy guide Edge Computing vs Cloud decisions for real-time and batch workloads?

A hybrid cloud strategy guides Edge Computing vs Cloud decisions by placing time-sensitive tasks at the edge while using cloud resources for scale and batch analytics. This approach provides flexibility, reduces risk, and helps manage data gravity, bandwidth, and governance. Define clear interfaces and data pipelines so edge and cloud components work in concert.

What are cloud computing differences that drive when to choose Edge Computing vs Cloud?

Cloud computing differences include elastic scalability, global availability, and access to mature cloud-native services. Edge computing brings proximity, offline capability, and local decision-making. In practice, many workloads are split between the two: the edge handles real-time processing and the cloud handles analytics and archival storage, often coordinated through a hybrid setup.

How do data sovereignty and security concerns shape Edge Computing vs Cloud implementations?

Data sovereignty and security considerations influence Edge Computing vs Cloud choices in two ways. Keeping sensitive data close to the source helps meet localization rules, but distributed devices still require strong encryption, secure updates, and centralized governance where appropriate. Plan for device hardening, end-to-end encryption, and monitored data synchronization across edge and cloud.

What are edge computing advantages compared with cloud services, and when should you favor Edge Computing vs Cloud?

Edge computing advantages include latency reduction, bandwidth savings, and local autonomy, especially in constrained networks. However, it adds operational complexity. When comparing Edge Computing vs Cloud, use edge for real-time control and the cloud for analytics and long-term storage within a hybrid strategy.

When should you adopt an edge-first vs cloud-first approach within Edge Computing vs Cloud, and how does a hybrid cloud strategy help?

When deciding between edge-first and cloud-first within Edge Computing vs Cloud, use criteria such as latency budgets, connectivity, regulatory requirements, risk tolerance, and total cost of ownership. Start with non-critical workloads, run parallel pilots, and gradually shift more workloads to the edge as confidence grows, all within a hybrid cloud strategy that coordinates edge and cloud resources.
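
A few of these criteria can be turned into a first-pass placement rule. The sketch below is deliberately simplistic: it considers only latency budget, offline operation, and data locality, and leaves out risk tolerance and total cost of ownership. The `Workload` fields, the example workloads, and the 60 ms cloud round trip are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float
    must_run_offline: bool
    data_must_stay_local: bool


def place(workload: Workload, cloud_round_trip_ms: float) -> str:
    """Suggest 'edge' or 'cloud' for a workload using three hard constraints."""
    if workload.must_run_offline or workload.data_must_stay_local:
        return "edge"
    if workload.latency_budget_ms < cloud_round_trip_ms:
        return "edge"
    return "cloud"


if __name__ == "__main__":
    candidates = [
        Workload("robot-arm-control", 10, False, False),
        Workload("monthly-report", 60_000, False, False),
        Workload("patient-vitals", 5_000, False, True),
    ]
    for w in candidates:
        print(w.name, "->", place(w, cloud_round_trip_ms=60))
```

A rule like this is best treated as a starting point for the parallel pilots mentioned above, not as a replacement for them.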

| Topic | Edge Computing | Cloud |
|---|---|---|
| Definition | Processing data near source (local devices, gateways, or edge servers) to reduce latency and enable real-time responsiveness, even when connectivity to central data centers is intermittent. | Centralized processing in data centers or managed cloud environments offering elasticity, centralized governance, broad AI/ML capabilities, and simplified management for large, diverse workloads. |
| Core Advantage | Latency-sensitive, bandwidth-constrained scenarios; pre-process data locally to shorten response times. | Elastic compute, centralized governance, scalable for large, diverse workloads; broad AI/ML capabilities. |
| Hybrid Approach | Edge-to-cloud continuum blending on-premises and cloud; synchronize data/insights across edge and cloud. | Centralized coordination and analytics; long-term storage and enterprise governance; cloud-native services. |
| Latency & Bandwidth | Latency reduction; local data processing; minimize data sent to cloud. | Supports heavy processing and bulk data transfers; suitable for non-latency-critical tasks. |
| Security & Data Sovereignty | Keep sensitive data local; easier localization; requires robust edge security. | Centralized security policies; scalable governance; potential broader attack surface; data residency varies. |
| When to Choose | Edge-first for ultra-low latency or offline operation. | Cloud-first for rapid scale, global availability, and access to cloud-native services; hybrid often best. |
| Use Cases | Predictive maintenance, real-time monitoring, smart manufacturing, offline retail. | Enterprise analytics, AI model training, cross-site orchestration; cloud supports long-term storage. |
| Architecture & Data Strategy | Edge devices, gateways, regional data centers; decide what data stays at edge, what is summarized, what goes to cloud; define synchronization rules. | Central cloud services; data lakes, analytics; cross-region governance; data synchronization with edge. |
| Migration & Implementation | Incremental adoption; start with non-critical workloads; parallel experiments; automation; telemetry. | Infrastructure as Code, CI/CD for cloud and edge; telemetry; governance; phased migration. |
| Security Focus | Device authentication, secure firmware updates, encryption in transit, least-privilege access. | Centralized security updates, encryption, access control; governance; monitoring. |
| Looking Ahead | 5G, AI at the edge, distributed AI; edge runtimes and accelerators. | Cloud-native services evolve; tighter edge orchestration; coordinated governance. |

Summary

Edge Computing vs Cloud presents a nuanced continuum rather than a binary choice, guiding organizations to place workloads where they perform best while aligning with security, cost, and governance needs. A hybrid approach is often the practical path, blending edge and cloud resources to reduce latency for real-time tasks and to scale heavy analytics in centralized environments. Use a decision framework that maps workloads to latency budgets, data sovereignty, bandwidth, risk, and total cost of ownership. Start small with non-critical workloads and gradually migrate to the edge or cloud as requirements evolve, using automation and IaC to maintain consistency. Across industries, edge use cases such as predictive maintenance, real-time monitoring, and offline capability demonstrate tangible benefits, while cloud capabilities enable enterprise analytics, AI model development, and global orchestration. Looking ahead, advances in 5G, AI at the edge, and tighter cloud-edge integration will continue shaping Edge Computing vs Cloud strategies, underscoring the need for a flexible, governance-driven hybrid architecture that adapts to changing workloads and regulatory landscapes.