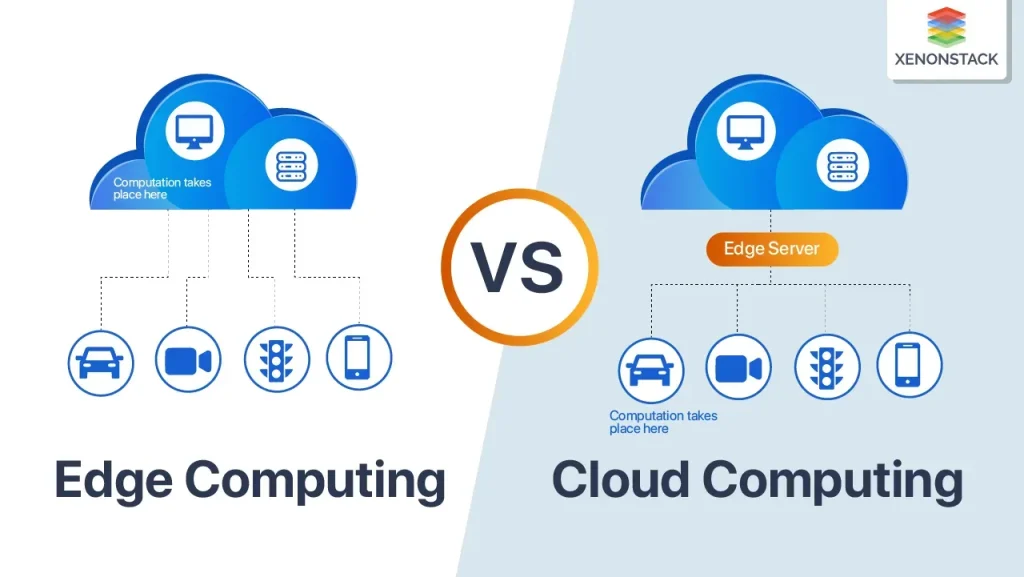

Cloud and edge computing are reshaping how organizations store, process, and analyze data by bringing intelligence closer to the points of action and by enabling new forms of collaboration across teams. Processing data near where it is generated yields faster insights, improved resilience, and the ability to act on real-time signals without waiting for round-trips to distant cloud regions. By blending private on-premises resources with scalable public services, businesses can place each workload according to its sensitivity, compliance, and performance needs while staying adaptable as requirements evolve. Modern platforms, advanced networking, and policy-driven governance help ensure that critical tasks run where they are most effective, reducing risk while expanding opportunities for innovation. Together, these capabilities help IT and business units move faster, improve customer experiences, and maintain control across a broader, more dynamic technology landscape, while scalable security controls and auditable data flows support resilience.

A closer look reveals a convergence between centralized cloud services and local computing, in which decisions are made close to data sources instead of waiting on distant servers. Rather than seeing cloud and edge as separate domains, organizations are pursuing integrated architectures that emphasize edge proximity, data locality, and intelligent orchestration to optimize workloads. This shift brings design patterns such as tiered processing, fog layers, and event-driven microservices that coordinate across devices, gateways, and core platforms. As teams rethink governance, security, and data stewardship, they focus on end-to-end observability, policy-driven risk management, and resilient operations across distributed resources.

Cloud and Edge Computing: Building a Unified Architecture for Speed, Security, and Scale

The evolution toward a distributed IT infrastructure centers on harmonizing centralized cloud resources with decentralized edge capabilities. A unified architecture makes it possible to orchestrate workloads across cloud and edge, aligning data gravity (the tendency of large datasets to attract the applications and services that use them) and compute proximity with business goals. This holistic view is a cornerstone of modern hybrid cloud strategies, delivering scalable resources while preserving localized responsiveness.

In practice, designing such an architecture means defining data governance policies, choosing where data is processed, and enabling seamless data flow between edge nodes and centralized services. The result is a distributed IT infrastructure that supports governance, security, and performance at enterprise scale.
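
One common way to keep data flowing between edge nodes and central services when connectivity is intermittent is a store-and-forward buffer. The sketch below assumes a bounded in-memory queue and a caller-supplied `send` function that returns `True` when the central service accepts a batch. `StoreAndForwardBuffer` and `fake_send` are hypothetical names, and a production edge agent would more likely persist the queue to local disk.

```python
from collections import deque
from dataclasses import dataclass, field
from itertools import islice
from typing import Callable, Deque, List


@dataclass
class StoreAndForwardBuffer:
    """Hypothetical edge-side buffer: queue records locally, then forward
    them to a central service whenever the uplink accepts a batch."""
    send: Callable[[List[dict]], bool]  # returns True if the batch was accepted
    max_items: int = 1000               # bound local storage on the device
    batch_size: int = 100
    _queue: Deque[dict] = field(default_factory=deque, init=False, repr=False)

    def enqueue(self, record: dict) -> None:
        # When the buffer is full, drop the oldest record to keep the newest data.
        if len(self._queue) >= self.max_items:
            self._queue.popleft()
        self._queue.append(record)

    def flush(self) -> int:
        """Send queued records in order, one batch at a time. Stops at the
        first rejected batch so unsent records stay queued.
        Returns the number of records delivered."""
        delivered = 0
        while self._queue:
            batch = list(islice(self._queue, self.batch_size))
            if not self.send(batch):
                break  # uplink unavailable: keep the batch for the next attempt
            for _ in batch:
                self._queue.popleft()
            delivered += len(batch)
        return delivered

    def __len__(self) -> int:
        return len(self._queue)


# Usage with a fake uplink that can be switched on and off.
received: List[dict] = []
link_up = False


def fake_send(batch: List[dict]) -> bool:
    if link_up:
        received.extend(batch)
        return True
    return False


buf = StoreAndForwardBuffer(send=fake_send, max_items=5, batch_size=2)
for i in range(7):
    buf.enqueue({"seq": i})     # seq 0 and 1 are evicted once the buffer is full
print(len(buf), buf.flush())    # 5 0  (link down, nothing sent)
link_up = True
print(buf.flush(), len(buf))    # 5 0
```

Evicting the oldest records when the buffer is full is only one possible policy. Some deployments would rather block producers or spill to local storage instead.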

Edge Data Processing: Local Compute for Faster Insights and Better Privacy

Edge data processing moves filtering, aggregation, and inference to or near the data source, dramatically reducing round-trips to the cloud and cutting latency. This is one of the core benefits of edge computing, enabling immediate feedback in manufacturing, healthcare, and consumer devices.

By processing data at the edge, organizations can save bandwidth and improve resilience while still leveraging cloud analytics when needed. This approach supports privacy-preserving workflows by limiting the data that leaves the source, aligning with overarching data governance goals.
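
As a minimal illustration of filtering and aggregating at the source, the sketch below collapses a window of raw readings into one summary record before anything is sent upstream. The field names (`sensor`, `patient_id`, `value`) and the readings are invented for illustration. Real pipelines would choose window sizes and statistics to suit the downstream analytics.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[Dict]) -> Dict:
    """Reduce one (non-empty) window of raw readings to a single summary.
    Only aggregate fields are emitted, so identifiers such as
    `patient_id` in the raw records never leave the device."""
    values = [r["value"] for r in readings]
    return {
        "sensor": readings[0]["sensor"],
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(mean(values), 3),
    }


# Hypothetical raw readings from one wearable sensor over a short window.
raw = [
    {"sensor": "temp-01", "patient_id": "p-123", "value": v}
    for v in [36.6, 36.8, 37.1, 36.9, 37.0, 36.7]
]
summary = summarize_window(raw)
raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(summary).encode())
print(summary)
print(f"{raw_bytes} bytes raw vs {summary_bytes} bytes forwarded")
```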

Hybrid Cloud Strategies: The Blueprint for Balancing On-Premises, Public Cloud, and Edge

A hybrid cloud strategy blends private clouds or on-premises data centers with public cloud services, allowing workloads to move based on latency, cost, and compliance considerations. In this pattern, edge components tackle latency-sensitive tasks while central clouds provide scalable analytics and long-term storage.

Effective hybrid cloud strategies require clear data classification, policy-driven routing, and robust security. They enable smarter distribution of workloads across a distributed IT infrastructure and ensure governance across geographic and regulatory boundaries.
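
Policy-driven routing can be sketched as an ordered set of rules evaluated against each workload's classification. The data classes, the 20 ms threshold, and the `route` function below are hypothetical assumptions chosen for illustration, not a standard scheme.

```python
from dataclasses import dataclass
from enum import Enum


class DataClass(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    RESTRICTED = "restricted"  # e.g. regulated or personal data


class Target(Enum):
    EDGE = "edge"
    PRIVATE = "private_cloud"
    PUBLIC = "public_cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    data_class: DataClass
    latency_budget_ms: float


# Hypothetical cutoff: below this budget, only an edge node can respond in time.
EDGE_LATENCY_THRESHOLD_MS = 20.0


def route(w: Workload) -> Target:
    # Rule 1: latency-critical work stays at the edge.
    if w.latency_budget_ms < EDGE_LATENCY_THRESHOLD_MS:
        return Target.EDGE
    # Rule 2: restricted data stays on private or on-premises infrastructure.
    if w.data_class is DataClass.RESTRICTED:
        return Target.PRIVATE
    # Rule 3: everything else can use elastic public cloud capacity.
    return Target.PUBLIC


workloads = [
    Workload("robot-arm-control", DataClass.INTERNAL, 5),
    Workload("patient-records-etl", DataClass.RESTRICTED, 60_000),
    Workload("marketing-dashboard", DataClass.PUBLIC, 2_000),
]
for w in workloads:
    print(f"{w.name:22} -> {route(w).value}")
```

Rule order encodes priority. Here latency wins over sensitivity, on the assumption that edge nodes sit on infrastructure the organization controls. A different risk posture might check the data class first.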

Latency Reduction and Real-Time Analytics at the Edge

Latency reduction is a primary driver for edge deployments, enabling real-time analytics, near-immediate feedback, and autonomous control loops. Edge nodes can run inference models, sensor fusion, and rule-based actions without cloud round-trips.
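
A rule-based action at the edge can be as simple as a threshold controller that runs entirely on the local node. The sketch below assumes a hypothetical cooling-fan actuator. It uses hysteresis (separate on and off thresholds) so the output does not flap when readings hover near a single cutoff. The threshold values are illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class HysteresisController:
    """Switches a (hypothetical) cooling fan on above `on_above` and off below
    `off_below`. The gap between the thresholds suppresses rapid on/off
    flapping when a reading hovers near a single cutoff."""
    on_above: float
    off_below: float
    fan_on: bool = False

    def __post_init__(self) -> None:
        if self.off_below >= self.on_above:
            raise ValueError("off_below must be lower than on_above")

    def step(self, reading: float) -> bool:
        if not self.fan_on and reading > self.on_above:
            self.fan_on = True
        elif self.fan_on and reading < self.off_below:
            self.fan_on = False
        return self.fan_on


def run(controller: HysteresisController, readings: Iterable[float]) -> List[bool]:
    # Every decision is made locally; there is no network call in the loop.
    return [controller.step(r) for r in readings]


ctrl = HysteresisController(on_above=80.0, off_below=75.0)
states = run(ctrl, [70, 79, 81, 79, 76, 74, 78, 82])
print(states)  # [False, False, True, True, True, False, False, True]
```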

Real-world scenarios include industrial IoT and smart cities, where immediate decisions matter. The cloud serves as a partner for historical analysis and model training, but the edge handles live decision-making to speed outcomes.

Security, Governance, and Compliance in a Distributed IT Infrastructure

Security must span both cloud and edge layers, with strong identity and access management, encryption in transit and at rest, and hardware-backed protections where applicable. A distributed IT infrastructure expands the attack surface, because every edge node, gateway, and network link is a potential entry point, which makes consistent policy enforcement essential.
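
Consistent policy enforcement is easier when cloud and edge evaluate the same rules. The sketch below shows a deny-by-default, role-based authorization check that could be shipped unchanged to every node. The roles, actions, and resources are hypothetical, and the example covers authorization only. Authentication, encryption, and hardware-backed key storage would come from the platform's IAM and TLS infrastructure.

```python
from dataclasses import dataclass
from typing import FrozenSet, Mapping, Tuple

# Hypothetical policy: role -> set of (action, resource) pairs it may perform.
# The same policy object is distributed to cloud and edge so both enforce identical rules.
POLICY: Mapping[str, FrozenSet[Tuple[str, str]]] = {
    "edge-agent": frozenset({("write", "telemetry"), ("read", "model")}),
    "data-scientist": frozenset({("read", "telemetry"), ("write", "model")}),
    "operator": frozenset({("read", "telemetry"), ("restart", "edge-node")}),
}


@dataclass(frozen=True)
class Request:
    principal: str
    role: str
    action: str
    resource: str


def is_allowed(req: Request, policy: Mapping[str, FrozenSet[Tuple[str, str]]] = POLICY) -> bool:
    # Deny by default: unknown roles and unlisted (action, resource) pairs are rejected.
    return (req.action, req.resource) in policy.get(req.role, frozenset())


checks = [
    Request("node-17", "edge-agent", "write", "telemetry"),
    Request("node-17", "edge-agent", "write", "model"),
    Request("alice", "intern", "read", "telemetry"),
]
for req in checks:
    verdict = "allow" if is_allowed(req) else "deny"
    print(req.principal, req.action, req.resource, "->", verdict)
```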

Governance and compliance require clear data ownership, lifecycle management, and data lineage across nodes. Implementing data residency controls and cross-border data flow policies ensures regulatory alignment while enabling collaboration across locations.
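
Data residency controls and lineage tracking can be combined at the point where data moves. This sketch assumes hypothetical region names and a simple allow list that maps each data class to permitted regions. A transfer is refused unless the destination is permitted for that class, and every permitted move is recorded in the dataset's lineage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical residency rules: data class -> regions where it may be stored or processed.
RESIDENCY_RULES: Dict[str, Set[str]] = {
    "personal": {"eu-west", "eu-central"},
    "telemetry": {"eu-west", "eu-central", "us-east"},
}


@dataclass
class Dataset:
    name: str
    data_class: str
    region: str
    lineage: List[dict] = field(default_factory=list)


class ResidencyViolation(Exception):
    pass


def transfer(ds: Dataset, dest_region: str, purpose: str) -> Dataset:
    # Unknown data classes get an empty allow list, so they cannot move anywhere.
    allowed = RESIDENCY_RULES.get(ds.data_class, set())
    if dest_region not in allowed:
        raise ResidencyViolation(f"{ds.name} ({ds.data_class}) may not move to {dest_region}")
    # Record where the data came from, where it went, why, and when, for audit.
    ds.lineage.append({
        "from": ds.region,
        "to": dest_region,
        "purpose": purpose,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    ds.region = dest_region
    return ds


customers = Dataset("customers", "personal", "eu-west")
transfer(customers, "eu-central", "disaster-recovery replica")
try:
    transfer(customers, "us-east", "analytics")
    blocked = False
except ResidencyViolation as err:
    blocked = True
    print("blocked:", err)
print(customers.region, customers.lineage)
```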

Organizational Readiness: Observability, Collaboration, and Automation in Cloud-Edge Deployments

Transitioning to cloud and edge deployments demands new operating models. Cross-functional teams—cloud architects, edge engineers, security specialists, network professionals, and data scientists—must collaborate to deliver end-to-end performance. Observability, with unified telemetry and dashboards, is critical for detecting anomalies across distributed components.
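
Unified telemetry pays off when it feeds automated anomaly detection. The sketch below assumes latency samples tagged by node. It flags a sample whose z-score against that node's recent rolling window exceeds a threshold. `LatencyMonitor`, the window size, and the threshold of 3 standard deviations are illustrative choices, not recommendations.

```python
from collections import defaultdict, deque
from statistics import mean, pstdev
from typing import Deque, Dict, List, Tuple


class LatencyMonitor:
    """Keeps a rolling window of latency samples per node and flags a sample
    whose z-score against that node's window exceeds `z_threshold`."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_samples: int = 5):
        self.window = window
        self.z_threshold = z_threshold
        self.min_samples = min_samples  # no alerts until a baseline exists
        self._samples: Dict[str, Deque[float]] = defaultdict(lambda: deque(maxlen=self.window))
        self.alerts: List[Tuple[str, float]] = []

    def record(self, node: str, latency_ms: float) -> bool:
        history = self._samples[node]
        anomalous = False
        if len(history) >= self.min_samples:
            mu, sigma = mean(history), pstdev(history)
            # A perfectly flat history (sigma == 0) is skipped to avoid dividing by zero.
            if sigma > 0 and abs(latency_ms - mu) / sigma > self.z_threshold:
                anomalous = True
                self.alerts.append((node, latency_ms))
        history.append(latency_ms)
        return anomalous


mon = LatencyMonitor(window=10)
stream = [("edge-a", v) for v in [12, 13, 11, 12, 14, 13, 12, 45]]
stream += [("edge-b", v) for v in [30, 31, 29, 30, 32, 31]]
for node, ms in stream:
    mon.record(node, ms)
print(mon.alerts)  # [('edge-a', 45)]
```

Each node keeps its own baseline, so a gateway that is normally slower than its peers is not flagged simply for being slow. Anomalous samples are still added to the window. This lets the baseline adapt, but it can also mask a sustained shift.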

Automation and intelligent orchestration enable dynamic workload placement, scaling, and fault recovery. AI-assisted management tools help optimize resource use across the hybrid environment, reducing manual toil and accelerating time-to-value.
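
Automated fault recovery often reduces to a reconciliation loop: compare observed state with desired state and move work accordingly. The sketch below assumes hypothetical `Node` records with a slot-based capacity and a heartbeat timestamp. Workloads on nodes whose heartbeat is older than the timeout are reassigned to the healthy node with the most free slots.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    capacity: int           # hypothetical unit: workload slots
    last_heartbeat: float   # seconds on a monotonic clock
    workloads: List[str] = field(default_factory=list)

    @property
    def free(self) -> int:
        return self.capacity - len(self.workloads)


def reconcile(nodes: Dict[str, Node], now: float, heartbeat_timeout: float = 10.0) -> List[str]:
    """Move workloads off nodes with stale heartbeats onto the healthy node
    with the most free capacity. Returns workloads that could not be placed."""
    healthy = [n for n in nodes.values() if now - n.last_heartbeat <= heartbeat_timeout]
    failed = [n for n in nodes.values() if now - n.last_heartbeat > heartbeat_timeout]
    unplaced: List[str] = []
    for dead in failed:
        while dead.workloads:
            wl = dead.workloads.pop(0)
            target = max(healthy, key=lambda n: n.free, default=None)
            if target is None or target.free <= 0:
                unplaced.append(wl)  # no capacity left anywhere: surface for escalation
                continue
            target.workloads.append(wl)
    return unplaced


nodes = {
    "edge-1": Node("edge-1", capacity=3, last_heartbeat=100.0, workloads=["cam-inference"]),
    "edge-2": Node("edge-2", capacity=2, last_heartbeat=85.0, workloads=["sensor-fusion", "alerting"]),
    "edge-3": Node("edge-3", capacity=2, last_heartbeat=99.0),
}
unplaced = reconcile(nodes, now=100.0)  # edge-2 is 15 s stale, beyond the 10 s timeout
print({name: n.workloads for name, n in nodes.items()}, unplaced)
```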

Frequently Asked Questions

What are the key cloud and edge computing benefits when implementing hybrid cloud strategies?

Cloud and edge computing deliver faster insights by distributing compute tasks closer to data sources as part of hybrid cloud strategies. The edge enables real-time analytics and edge data processing, while the cloud provides scalable storage and long-running services. This combination reduces latency, optimizes bandwidth, and improves resilience and governance across a distributed IT infrastructure.

How does edge data processing support latency reduction in a distributed IT infrastructure?

Edge data processing brings computation to the source, enabling near-instant responses and reducing round-trips to centralized clouds. In a distributed IT infrastructure, this lowers latency, saves bandwidth, and offloads work from core data centers. Use cases include real-time monitoring, immediate control loops, and privacy-preserving workflows.

Why is latency reduction critical in cloud and edge computing architectures, and how can it be achieved?

Latency reduction is essential for real-time decision-making and user experiences in cloud and edge computing. Achieve it through edge data processing, edge nodes close to data sources, and hybrid cloud strategies that route latency-sensitive workloads to the edge. Combining fog or microservices patterns with robust orchestration helps sustain responsiveness.

What role does the edge play in a distributed IT infrastructure for real-time analytics and resilience?

The edge provides localized compute and storage, enabling fast analytics and immediate responses where data is produced. In a distributed IT infrastructure, edge computing reduces backhaul, lowers latency, and supports resilience by keeping critical work near the source. Cloud services then aggregate results for deeper insights.

How do hybrid cloud strategies balance data sovereignty and performance in cloud and edge computing deployments?

Hybrid cloud strategies place sensitive or compliant workloads in private clouds or on-premises, while non-sensitive tasks run in the public cloud, preserving performance and cost efficiency. Edge computing further helps by processing data locally, supporting data sovereignty and latency reduction. This approach delivers faster responses and governance across distributed environments.

What patterns optimize edge data processing within a cloud and edge computing environment?

Key patterns include a centralized cloud with regional edge nodes, where the cloud handles long-term storage and model training and edge nodes perform local processing. Fog computing and microservices enable data aggregation and scalable, event-driven workflows across cloud and edge. Proper data classification and routing ensure that data is processed at the edge when needed and synchronized with cloud analytics.
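
Fog layers can aggregate data from many edge nodes without re-reading raw data if each tier exchanges mergeable summaries. The sketch below assumes a simple count/sum/min/max summary, using a hypothetical `Summary` type. Because merging is associative and commutative, devices, fog gateways, and the cloud can combine partial results in any grouping and reach the same totals.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Iterable


@dataclass(frozen=True)
class Summary:
    """A mergeable aggregate of count, sum, min, and max."""
    count: int
    total: float
    minimum: float
    maximum: float

    @staticmethod
    def of(values: Iterable[float]) -> "Summary":
        vals = list(values)  # must be non-empty: min/max raise on empty input
        return Summary(len(vals), sum(vals), min(vals), max(vals))

    def merge(self, other: "Summary") -> "Summary":
        return Summary(
            self.count + other.count,
            self.total + other.total,
            min(self.minimum, other.minimum),
            max(self.maximum, other.maximum),
        )

    @property
    def mean(self) -> float:
        return self.total / self.count


# Tier 1: each edge device summarizes its own raw readings.
site_a = [Summary.of([1, 2, 3]), Summary.of([4, 5])]
site_b = [Summary.of([10, 20])]
# Tier 2: a fog gateway per site merges its devices' summaries.
fog_a = reduce(Summary.merge, site_a)
fog_b = reduce(Summary.merge, site_b)
# Tier 3: the cloud merges the fog results into a global view.
global_view = fog_a.merge(fog_b)
print(global_view, global_view.mean)
```

The mean is derived from the merged sum and count rather than merged directly. Averaging the averages would give the wrong answer whenever groups differ in size.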

| Aspect | Key Points |
|---|---|
| What Cloud and Edge Computing Are | A coordinated architecture that blends centralized cloud services with edge processing to enable near real-time insights and resilience. |
| Edge Advantages | Reduces latency by processing near the data source; saves bandwidth; enables real-time analytics for latency-sensitive scenarios (e.g., autonomous systems, industrial automation). |
| Hybrid Cloud Paradigm | Keeps sensitive data on private/on-premises infrastructure while offloading scalable tasks to the public cloud; edge handles latency-critical processing and feeds results back to the cloud for analytics. |
| Architectural Patterns | Centralized cloud with regional edge nodes; fog computing as an intermediate layer; microservices and event-driven architectures across cloud and edge; data classification and routing. |
| Edge Data Processing in Practice | Performs filtering, aggregation, and inference at or near the source to reduce latency and costs and to improve user experience (e.g., industrial IoT, smart cities, retail/media, healthcare). |
| Security, Governance, and Compliance | Strong IAM across nodes; secure edge processing; governance for data ownership and lifecycle; incident response; regulatory compliance and data residency considerations. |
| Operational and Organizational Implications | Cross-functional teams spanning cloud, edge, security, and data science; observability with centralized telemetry and edge dashboards; unified alerting. |
| Future Trends | AI/ML at the edge; sovereign clouds and data fabric; 5G and beyond; automation and intelligent orchestration across hybrid environments. |
| Choosing the Right Path | Map workloads to business value, assess data gravity and latency, consider regulatory constraints, and implement a phased plan that migrates workloads between edge and cloud as needed. |

Summary

Cloud and edge computing represent a powerful evolution in technology infrastructure. They enable organizations to deliver low-latency experiences, optimize bandwidth, and scale operations with greater agility. By embracing hybrid cloud strategies and implementing robust edge data processing, businesses can unlock new capabilities—ranging from real-time automation to personalized services—while maintaining governance and security. The journey toward a distributed IT landscape is ongoing, but with clear goals, thoughtful architecture, and a focus on interoperability, organizations can capitalize on the strengths of both cloud and edge computing to drive innovation and competitive advantage.